
vSphere 5.5 Update 2 Released

VMware vCenter Server 5.5 Update 2 and ESXi 5.5 Update 2 have just been released with a number of fixes and new features. These include vCenter Server database support for Oracle 12c, Microsoft SQL Server 2012 Service Pack 1 and Microsoft SQL Server 2014, and support for ESXi hosts with 6TB of RAM. For the full list, see the release notes.

One particular change was buried in the release notes and not mentioned in the announcement blog post, which suggests it is not considered a major change. For VMware administrators, however, it may be the most important change in Update 2 and the main reason to upgrade: with Update 2, you can once again edit virtual machines with hardware version 10 in the vSphere Client!

For more details, refer to http://blogs.vmware.com/vsphere/2014/09/vsphere-5-5-update-2-ga-available-download.html

Install Emulex CIM Provider in VMware ESXi

As part of VMware vSphere administration, you will have come across the term “CIM Provider”. Let's see what it is.

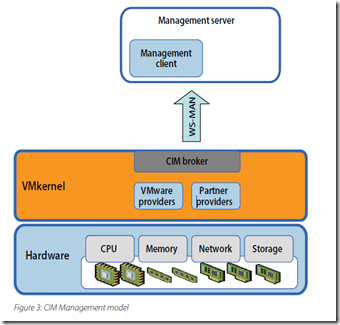

The Common Information Model (CIM) system: CIM is the interface that enables hardware-level management from remote applications via a set of standard APIs.

The CIM is an open standard that defines how computing resources can be represented and managed. It enables a framework for agentless, standards-based monitoring of hardware resources for ESXi. This framework consists of a CIM object manager, often called a CIM broker, and a set of CIM providers. CIM providers are used as the mechanism to provide management access to device drivers and underlying hardware.

Hardware vendors, which include both the server manufacturers and specific hardware device vendors, can write providers to provide monitoring and management of their particular devices. VMware also writes providers that implement monitoring of server hardware, ESX/ESXi storage infrastructure, and virtualization-specific resources. These providers run inside the ESXi system and hence are designed to be extremely lightweight and focused on specific management tasks.

The CIM object manager in ESXi implements a standard CMPI interface developers can use to plug in new providers. The CIM broker takes information from all CIM providers and presents it to the outside world via standard APIs, including WS-MAN (Web Services-Management). Figure 3 shows a diagram of the CIM management model.

Hardware vendors such as Brocade, HP, Dell, IBM, EMC, QLogic and Emulex provide these modules, and we have to install them on the ESX/ESXi hosts.

Now let's see how to implement the Emulex CIM provider in a vSphere infrastructure. There are three main components in the Emulex software solution for device management in a vSphere environment.

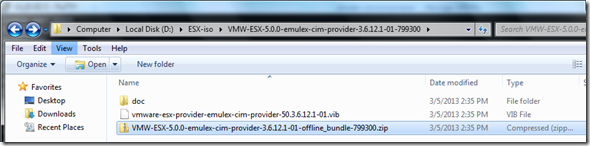

1- Emulex CIM Provider: installed on the ESX/ESXi hosts. It is available as an offline bundle (ZIP) or as a VIB.

2- Emulex OneCommand Manager (OCM): can be installed on any Windows virtual machine or on the vCenter Server itself.

OCM for VMware vCenter Server and the Emulex CIM provider for ESX/ESXi hosts are free to download from the Management tab of the Emulex download pages listed under Installation Steps.

3- Emulex vCenter Server plug-in: once everything is configured and ready, the OCM plug-in appears in the vSphere Client, and you just need to enable it.

Installation Steps

1- Download the CIM provider that matches your ESXi version. In my case it is vSphere 5 Update 2, so I downloaded the file “CIM Provider Package 3.8.15.1” and extracted the ZIP file.

http://www.emulex.com/downloads/emulex/drivers/vmware/

http://www.emulex.com/downloads/emulex/drivers/vmware/vsphere-50/management/

The release notes and user manual are available on the same pages for more information.

2- Upload the offline bundle ZIP file (VMW-ESX-5.0.0-emulex-cim-provider-3.6.12.1-01-offline_bundle-799300.zip) to any datastore accessible to the ESX/ESXi host, using the vSphere Client Datastore Browser or an SCP tool such as PuTTY's pscp.

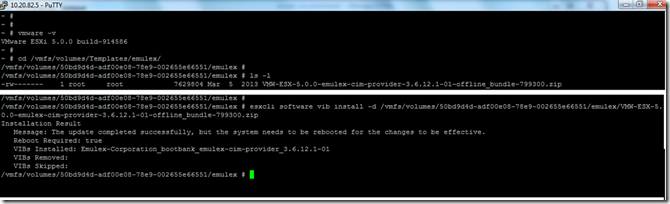

3- Log in to the ESXi shell via SSH, and first check that the file was copied correctly and where it is located.

~ # cd /vmfs/volumes/Templates/emulex/

/vmfs/volumes/50bd9d4d-adf00e08-78e9-002655e66551/emulex #

/vmfs/volumes/50bd9d4d-adf00e08-78e9-002655e66551/emulex # ls -l

-rw------- 1 root root 7629804 Mar 5 2013 VMW-ESX-5.0.0-emulex-cim-provider-3.6.12.1-01-offline_bundle-799300.zip

/vmfs/volumes/50bd9d4d-adf00e08-78e9-002655e66551/emulex #

4- Install the offline bundle. If the installation succeeds, esxcli reports that the update completed successfully.

/vmfs/volumes/50bd9d4d-adf00e08-78e9-002655e66551/emulex #

/vmfs/volumes/50bd9d4d-adf00e08-78e9-002655e66551/emulex # esxcli software vib install -d /vmfs/volumes/50bd9d4d-adf00e08-78e9-002655e66551/emulex/VMW-ESX-5.0.0-emulex-cim-provider-3.6.12.1-01-offline_bundle-799300.zip

Installation Result

Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.

Reboot Required: true

VIBs Installed: Emulex-Corporation_bootbank_emulex-cim-provider_3.6.12.1-01

VIBs Removed:

VIBs Skipped:

/vmfs/volumes/50bd9d4d-adf00e08-78e9-002655e66551/emulex #

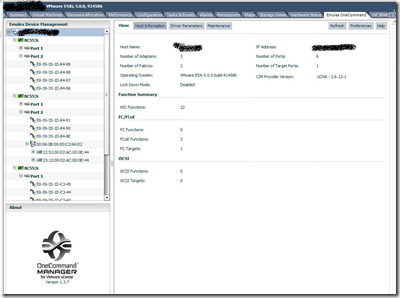

5- Reboot the ESXi host, as the installation result requires (Reboot Required: true). After the reboot, you can see the Emulex hardware details in the vSphere Client.
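If you deploy the provider to several hosts, the file check and the install step above can be wrapped in a small script. This is a minimal sketch, assuming the ESXi BusyBox shell (POSIX sh) and an offline bundle already uploaded to a datastore; `install_cim_bundle` and `run` are hypothetical helper names, and setting `DRY_RUN=1` only prints the esxcli command so you can review it first.

```shell
#!/bin/sh
# Sketch: install a CIM provider offline bundle on an ESXi host.
# install_cim_bundle and run are hypothetical helper names.
# DRY_RUN=1 prints the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

install_cim_bundle() {
  bundle="$1"
  # pass the full path to the bundle, as in the esxcli session above
  case "$bundle" in
    /*) ;;
    *) echo "error: expected an absolute path: $bundle" >&2; return 1 ;;
  esac
  # skip the file check in dry-run mode so the command can be previewed
  if [ "${DRY_RUN:-0}" != "1" ] && [ ! -f "$bundle" ]; then
    echo "error: bundle not found: $bundle" >&2
    return 1
  fi
  run esxcli software vib install -d "$bundle" || return 1
  echo "Reboot the host, then confirm with: esxcli software vib list | grep -i emulex"
}
```

For example, `DRY_RUN=1 install_cim_bundle /vmfs/volumes/Templates/emulex/VMW-ESX-5.0.0-emulex-cim-provider-3.6.12.1-01-offline_bundle-799300.zip` shows the exact command; run it again without `DRY_RUN=1`, then reboot the host.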

ESX/ESXi – Administration TIPS

How to disable or stop the CIM agent on the ESX/ESXi host?

Note: The CIM agent is the process providing hardware health information. Disabling this service will disable the hardware health status.

To disable the CIM agent on an ESXi host, log in to the ESXi shell as the root user and run these commands:

chkconfig sfcbd-watchdog off

chkconfig sfcbd off

/etc/init.d/sfcbd-watchdog stop

Note: The chkconfig changes disable the sfcbd service persistently across reboots.

To re-enable the CIM agent on the ESXi host, run these commands:

chkconfig sfcbd-watchdog on

chkconfig sfcbd on

/etc/init.d/sfcbd-watchdog start

Note: To check the status of the agent on ESXi, run this command:

/etc/init.d/sfcbd-watchdog status

For troubleshooting, you can use the following commands to restart the CIM-related services on the ESXi host:

/etc/init.d/wsman restart

/etc/init.d/sfcbd-watchdog restart
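The disable, enable, status and troubleshooting commands above can be kept in one place. This is a minimal sketch, assuming the ESXi 5.x shell where `chkconfig` and `/etc/init.d/sfcbd-watchdog` behave as shown in this post; `cim_agent` and `run` are hypothetical helper names, and `DRY_RUN=1` prints the commands without running them.

```shell
#!/bin/sh
# Sketch: one entry point for the CIM agent commands shown above.
# cim_agent and run are hypothetical helper names.
# DRY_RUN=1 prints the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

cim_agent() {
  case "$1" in
    disable)
      # persistent across reboots; hardware health status is lost
      run chkconfig sfcbd-watchdog off
      run chkconfig sfcbd off
      run /etc/init.d/sfcbd-watchdog stop
      ;;
    enable)
      run chkconfig sfcbd-watchdog on
      run chkconfig sfcbd on
      run /etc/init.d/sfcbd-watchdog start
      ;;
    status)
      run /etc/init.d/sfcbd-watchdog status
      ;;
    restart)
      # troubleshooting: restart WS-Management, then the CIM agent
      run /etc/init.d/wsman restart
      run /etc/init.d/sfcbd-watchdog restart
      ;;
    *)
      echo "usage: cim_agent disable|enable|status|restart" >&2
      return 2
      ;;
  esac
}
```

For example, `DRY_RUN=1 cim_agent disable` prints the three disable commands; drop `DRY_RUN=1` to run them as root.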

Disabling a Single ESXi CIM provider when it fails or is unstable.

To see the CIM providers installed on your ESXi host:

- Log in to the ESXi shell as the root user.

- Run the command:

esxcli system settings advanced list | grep CIM

- You see output similar to:

Path: /UserVars/CIMEnabled

Description: Enable or disable the CIM service

Path: /UserVars/CIMLogLevel

Description: Set the log level of the CIM Service

Path: /UserVars/CIMWatchdogInterval

Description: Set the watchdog polling interval for the CIM Service

Path: /UserVars/CIMvmw_sfcbrInteropProviderEnabled

Description: Enable or disable the CIM vmw_sfcbrInterop provider

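Path: /UserVars/CIMvmw_hdrProviderEnabled

- Disable a CIM provider by running the command below, replacing CIMProviderName with the provider's option name from the list above (for example, CIMvmw_hdrProviderEnabled):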

esxcli system settings advanced set -o /UserVars/CIMProviderName -i 0

Note: To re-enable the CIM provider, run the command:

esxcli system settings advanced set -o /UserVars/CIMProviderName -i 1

- To allow the changes to take effect, restart the SFCBD agent by running the command:

/etc/init.d/sfcbd-watchdog restart
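Disabling one provider is the same two steps every time: set its option, then restart the agent. This is a minimal sketch, assuming the option paths look like the ones in the `grep CIM` output above (for example `/UserVars/CIMvmw_hdrProviderEnabled`); `set_cim_provider` and `run` are hypothetical helper names, and the sketch refuses anything that does not look like a per-provider option so the global `/UserVars/CIMEnabled` switch is not touched by mistake.

```shell
#!/bin/sh
# Sketch: turn one CIM provider off (0) or on (1), then restart the agent.
# set_cim_provider and run are hypothetical helper names.
# DRY_RUN=1 prints the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

set_cim_provider() {
  option="$1"   # e.g. /UserVars/CIMvmw_hdrProviderEnabled
  value="$2"    # 0 = disable, 1 = enable
  case "$option" in
    /UserVars/CIM*ProviderEnabled) ;;
    *) echo "error: not a per-provider CIM option: $option" >&2; return 1 ;;
  esac
  case "$value" in
    0|1) ;;
    *) echo "error: value must be 0 or 1" >&2; return 1 ;;
  esac
  run esxcli system settings advanced set -o "$option" -i "$value" || return 1
  # the change takes effect once the CIM agent restarts
  run /etc/init.d/sfcbd-watchdog restart
}
```

For example, `set_cim_provider /UserVars/CIMvmw_hdrProviderEnabled 0` disables that provider, and the same call with `1` re-enables it.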

I will post a detailed blog about using OneCommand Manager.

ESXi Storage Device Naming Convention for a LUN : Part-1

This blog post is the result of my interactions in the VMware community, so the questions are simple!

1- Why do we need a storage device naming convention for LUNs, and what is the theory behind it?

2- Who is responsible for assigning a unique storage device name to a LUN on an ESX/ESXi host?

3- Why does a LUN need a unique LUN ID that is the same across all ESX/ESXi hosts in a cluster?

4- How can an ESX/ESXi host uniquely identify a LUN in a storage area network?

5- What are the different naming standards or conventions for a LUN on an ESX/ESXi host?

As we all know, to use features such as vMotion, HA and DRS with ESX/ESXi hosts and clusters, we have to create LUNs on the storage array and present them to the hosts. Now let's look at the answers to the questions above:

1- Why do we need such a naming convention, and what is the theory behind it?

The need for a standard

But here comes a basic problem. If I can expose the same LUN to one or more machines, how do I address it? In other words, how can I safely distinguish one LUN from another? This seems like a trivial problem: just attach a unique GUID to each LUN and you are done! Or a unique number. Or a string. But hold on, things are not that easy. What if storage array maker ABC assigns GUIDs to each LUN and another vendor assigns 32-bit numbers? We have a complete mess.

To add to the confusion, there is another concept: the serial number attached to a SCSI disk. But this doesn't work all the time. For example, some vendors assign a serial number to each LUN, but this serial number is not guaranteed to be unique. Some SCSI controllers even return the same serial number for all exposed LUNs!

Every hardware vendor had a more-or-less proprietary method to identify the LUNs exposed to a system. If you wanted to write an application that discovered all the LUNs, you had a hard time, because your code was tied to the specific model of each array. And what if two vendors had conflicting ways of assigning IDs to LUNs? You could end up with two LUNs having the same ID!

Storage devices, I/O interfaces and SAS disks all send and receive data using SCSI commands, so they all have to follow the SCSI (Small Computer System Interface) standards.

T10 develops standards and technical reports on I/O interfaces, particularly the series of SCSI (Small Computer System Interface) standards. T10 is a Technical Committee of the InterNational Committee on Information Technology Standards (INCITS, pronounced “insights”). INCITS is accredited by, and operates under rules that are approved by, the American National Standards Institute (ANSI).

T10 is responsible for the SCSI storage interface standards, including the SCSI architecture standards (SAM) and the SCSI command set standards. Among these, SCSI Primary Commands – 3 (SPC-3) contains the third-generation definition of the basic commands for all SCSI devices. As of now, all the major storage array vendors, such as EMC, NetApp, HP, Dell, IBM, Hitachi and many others, follow these T10 standards, and their arrays follow SPC-3 when creating and presenting LUNs and communicating with hosts. Similarly, the ESXi storage stack and other modern operating systems use these standards to communicate with the storage array and access the LUNs.

In short, these are vendor-neutral industry standards, so ISVs, OEMs and other software and hardware vendors can develop solutions and products within a single framework.

2- Who is responsible for assigning a unique storage device name to a LUN on an ESX/ESXi host?

Two parties assign and maintain a unique name for a LUN: the storage array and the host, each at its own level. Every LUN created and presented by the storage array is unique, and it is the array's responsibility to maintain that uniqueness, which it does using the T10/SPC-3 standards.

When we create a LUN with a LUN ID on the SAN, the SAN itself makes sure it is unique, and we give the LUN a friendly name so it is easy to recognize. Once the LUN is presented, the ESX/ESXi host identifies the volume with a unique ID (UUID), and ESX/ESXi in particular uses several different naming conventions and representations for it.

So the storage array is responsible for uniqueness and the ESX/ESXi host just uses the LUN, but both follow the T10/SPC-3 guidelines to maintain it.
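As a short illustration of the host-side naming conventions raised in question 5: ESXi device identifiers start with a prefix that tells you which scheme produced them, such as `naa.` (NAA identifier reported by the device), `t10.` (T10 vendor identifier), `eui.` (EUI-64 identifier) and `mpx.` (a VMware path-based name used when the device reports no unique identifier, for example some local devices). This is a minimal sketch that classifies an identifier by prefix only; `classify_device_id` is a hypothetical helper, and the prefix list is not meant to be exhaustive.

```shell
#!/bin/sh
# Sketch: report which naming scheme an ESXi device identifier uses,
# based only on its prefix. classify_device_id is a hypothetical helper.
classify_device_id() {
  case "$1" in
    naa.*) echo "NAA" ;;     # NAA identifier reported by the device
    t10.*) echo "T10" ;;     # T10 vendor identifier
    eui.*) echo "EUI" ;;     # EUI-64 identifier
    mpx.*) echo "MPX" ;;     # VMware path-based name (no unique ID reported)
    *)     echo "unknown" ;;
  esac
}
```

On a host, you could feed it the device names printed by `esxcli storage core device list`, for example `esxcli storage core device list | grep -E '^(naa|t10|eui|mpx)\.' | while read -r id; do echo "$id $(classify_device_id "$id")"; done`.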

vCenter Server 5.5.0a Released

After the major vSphere release in September, VMware has released an updated vCenter Server this month. Although it is a minor release, it fixes a lot of bugs.

The issues resolved in this release are as follows:

- Attempts to upgrade vCenter Single Sign-On (SSO) 5.1 Update 1 to version 5.5 might fail with error code 1603

- Attempts to log in to the vCenter Server might be unsuccessful after you upgrade from vCenter Server 5.1 to 5.5

- Unable to change the vCenter SSO administrator password on Windows in the vSphere Web Client after you upgrade to vCenter Server 5.5 or VCSA 5.5

- VPXD service might fail due to an MS SQL database deadlock caused by VPXD queries that run on the VPX_EVENT and VPX_EVENT_ARG tables

- Attempts to search the inventory in vCenter Server using vSphere Web Client with proper permissions might fail to return any results

- vCenter Server 5.5 might fail to start after a vCenter Single Sign-On Server reboot

- Unable to log in to vCenter Server Appliance 5.5 using domain credentials in vSphere Web Client with proper permission when the authenticated user is associated with a group name containing parentheses

- Active Directory group users unable to log in to the vCenter Inventory Service 5.5 with vCenter Single Sign-On

- Attempts to log in to vCenter Single Sign-On and vCenter Server might fail when there are multiple users with the same common name in the OpenLDAP directory service

- Attempts to log in to vCenter Single Sign-On and vCenter Server might fail for OpenLDAP 2.4 directory service users who have attributes with multiple values attached to their account

- Attempts to log in to vCenter Server might fail for an OpenLDAP user whose account is not configured with a universally unique identifier (UUID)

- Unable to add an OpenLDAP provider as an identity source if the Base DN does not contain a “dc=” attribute

- Active Directory authentication fails when vCenter Single Sign-On 5.5 runs on Windows Server 2012 and the AD Domain Controller is also on Windows Server 2012

Product Support Notices

More information is available in the release notes at the link below:

https://www.vmware.com/support/vsphere5/doc/vsphere-vcenter-server-550a-release-notes.html

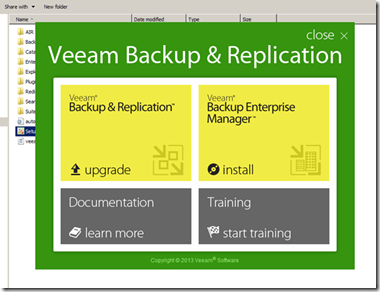

Veeam Upgrade from version 6.5 to 7

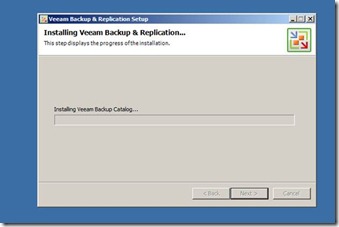

As you all know, Veeam, the pioneer in virtualization backup technology, has released its latest version, Veeam Backup and Replication 7. This is a major release with a lot of new features, which I will discuss in my next blog. I have just done an upgrade for a client and, to be honest, I was impressed by how simple and easy Veeam is. From an administrator's and an organization's point of view, this matters a lot. Amazingly, the upgrade took less than 20 minutes with a few mouse clicks!

The steps are given below:

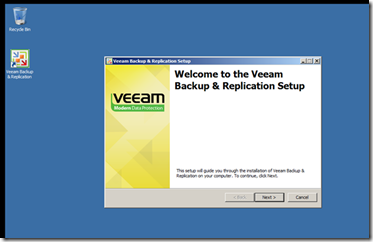

1- Download the latest version. It is distributed as an ISO image: extract it, or burn it to a CD and mount it, then run the Setup program.

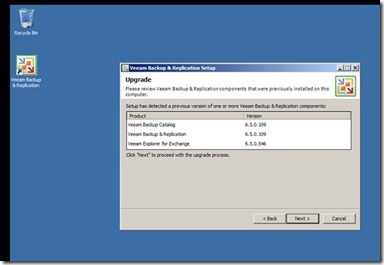

2- Click “Next”. The installer shows the current versions of the Veeam components; click “Next” again.

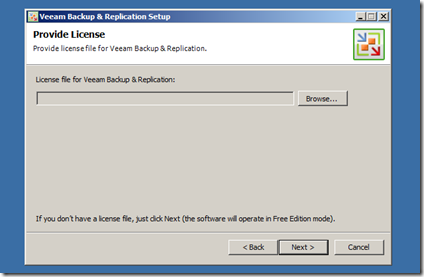

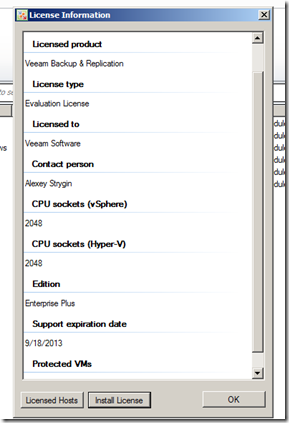

3- Download the license: your existing 6.5 license has to be upgraded to version 7. You can apply the license at this stage or after completing the upgrade.

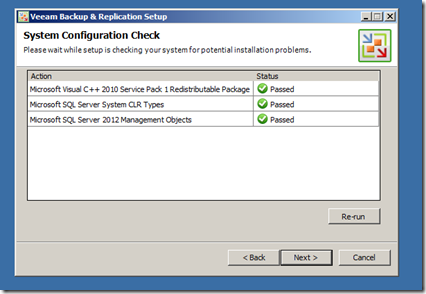

4- The installer checks the prerequisites automatically and installs any missing dependencies.

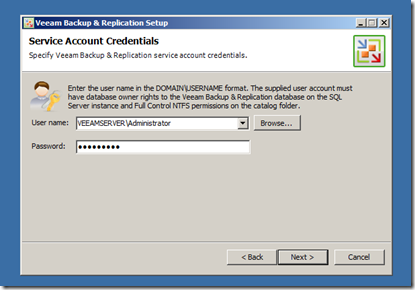

5- Enter local administrator or domain credentials that have full administrative access to the Veeam server and its database.

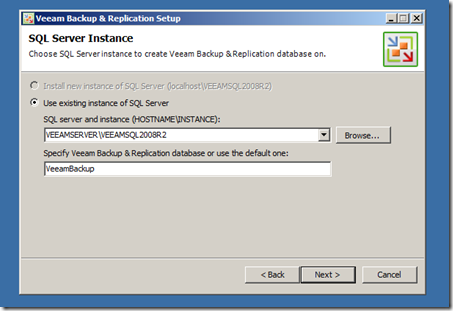

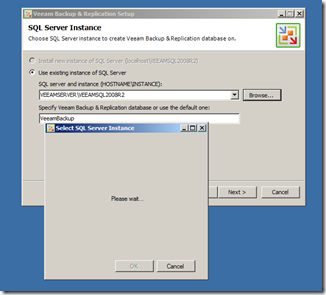

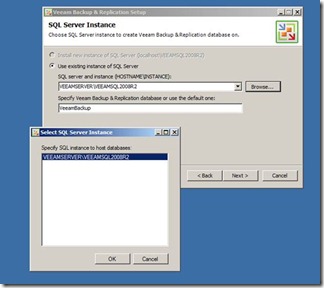

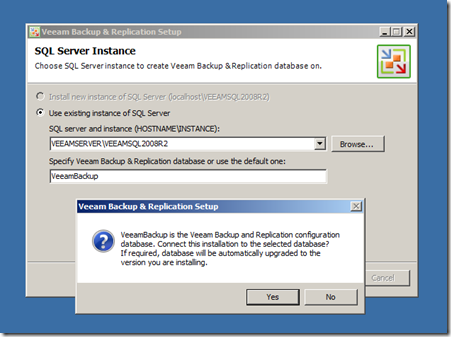

6- Select the correct MSSQL database instance. The installer then warns that “any old database will be automatically updated”. This is safe; just click “Yes”.

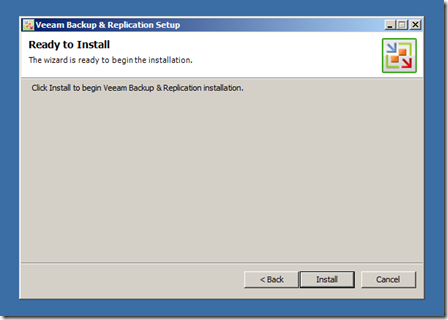

7- Click “Install”, then sit back and relax!

8- Click “Finish”. No reboot is needed, which is great! However, the upgrade is not complete at this stage.

9- Run the Veeam console from its desktop shortcut.

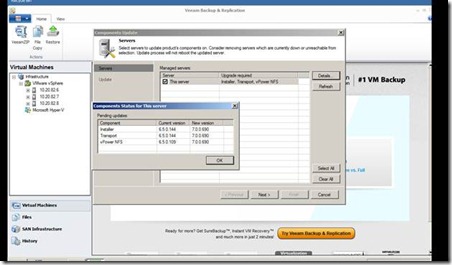

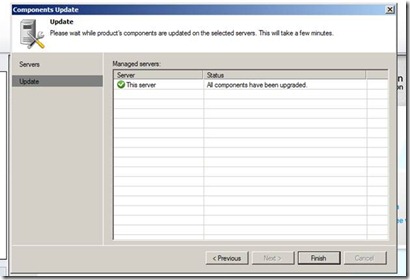

10- Upgrade the remaining components, such as the backup transport module and the instant recovery module: just click “OK”.

11- Apply the license

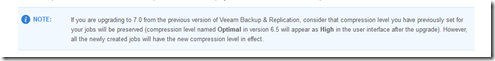

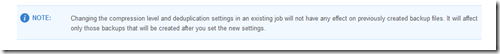

Now you can resume all of your backup operations.

This is why I really love Veeam: it is simple and easy to use. In my next blog, I will discuss the main features and their uses.

VMware vCenter Upgrade from 4.1 to 5.0 : Part-1

There are many ways to upgrade vCenter from 4.1 to 5.0, because customers have different scenarios in their environments. I would like to discuss these common scenarios:

OBJECTIVE: Upgrade the vCenter 4.1 to vCenter 5.0

SCENARIO :

A- vCenter 4.1 and its database on the same server; the database is MSSQL 2005 Express edition (the bundled database on the vCenter 4.1 DVD).

B- vCenter 4.1 and its database on the same server; the database is MSSQL 2005 Standard or Enterprise.

END RESULT :

A – vCenter 5.0 and its database on the same server. The database remains MSSQL 2005 Express edition, but its SCHEMA is upgraded.

B – vCenter 5.0 and its database on the same server. The database remains MSSQL 2005 Standard or Enterprise, but its SCHEMA is upgraded.

MIGRATION STEPS :

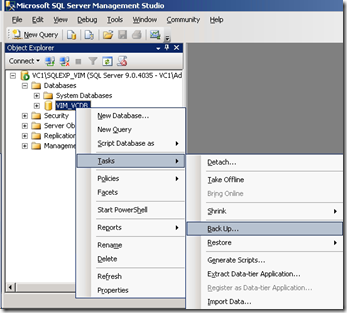

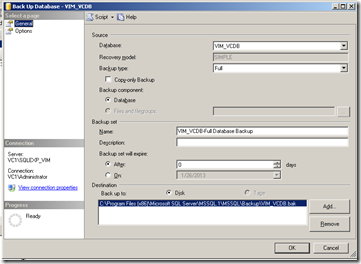

1- Install SQL Server Management Studio and make a full backup of the vCenter Server database.

Click Add and specify the location for the database backup, or use the default.

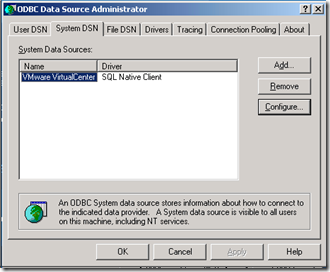

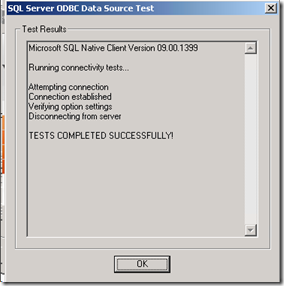

2- Check the existing system DSN for vCenter 4.1 in the ODBC Data Source Administrator, and test the connection settings.

3- Back up the SSL certificates that are on the VirtualCenter or vCenter Server system before you upgrade to vCenter Server 5.0. The default location of the SSL certificates is %allusersprofile%\Application Data\VMware\VMware VirtualCenter\SSL

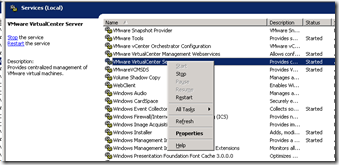

4- Stop the VMware VirtualCenter Server service.

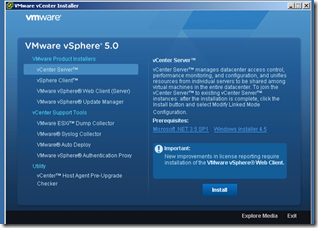

5- From the vSphere 5 DVD (or its extracted contents), run the AUTORUN.EXE installer and select “vCenter Server”. Also make sure all the prerequisites are installed.

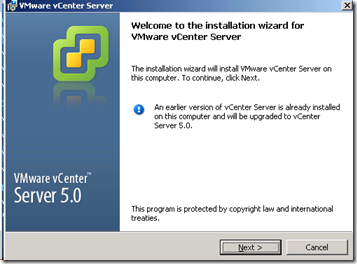

6- The installer wizard indicates that a previous version of vCenter is already present on the server. Click Next, accept the EULA, and enter the license key (you can also add the license later).

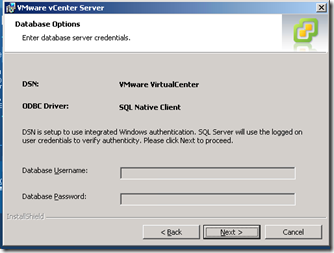

7- The existing DSN is detected automatically; click Next.

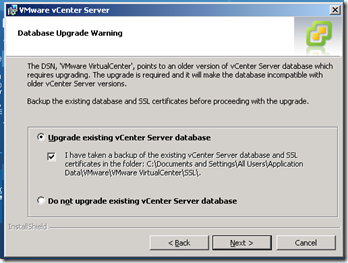

8- Select these options: you need to upgrade the database SCHEMA to accommodate vCenter 5.0.

“A dialog box might appear warning you that the DSN points to an older version of a repository that must be upgraded. If you click Yes, the installer upgrades the database schema, making the database irreversibly incompatible with previous VirtualCenter versions. See the vSphere Upgrade documentation”

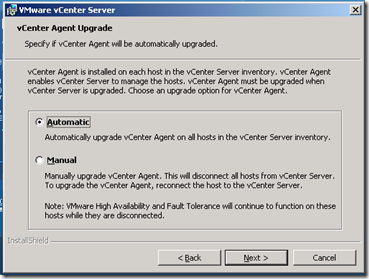

9- You can upgrade the vCenter agent on the ESXi hosts automatically or manually; it is not a big deal.

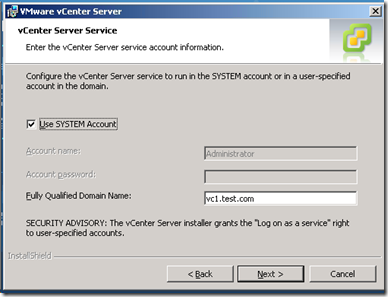

10- Enter the credentials and use the FQDN of the existing vCenter Server; otherwise the installer reports that the FQDN cannot be resolved and some vCenter features may not work. You can ignore this warning, but it is better to use the FQDN.

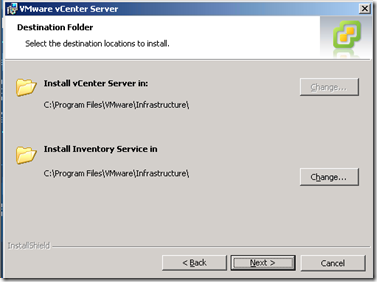

11- Use the default installation locations, or specify custom locations if needed.

12- It is better to use the default ports for vCenter, although you can change them if needed. The next few steps are self-explanatory; choose accordingly.

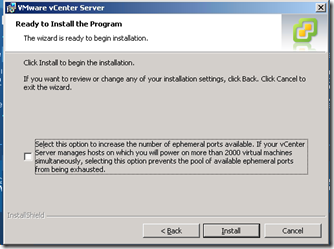

13- If you are using a distributed switch with a large number of port groups and virtual machines, you can select the option to increase the number of available ephemeral ports. This matters only if you use ephemeral ports; it is recommended to use static binding on the vDS, in which case you do not need this option.

14- Click Install. That's it!

My next post will cover how to upgrade vCenter and its database when they are on separate servers.

Magic Effect of SIOC (VMware Storage Input Output Control) in vSphere 5 : A practical study.

Recently I had a chance to implement and test SIOC, a vSphere Enterprise Plus feature. It is really an amazing feature.

Theory

Application performance can be impacted when servers contend for I/O resources in a shared storage environment. There is a crucial need to isolate the performance of critical applications from other, less critical workloads by appropriately prioritizing access to shared I/O resources. Storage I/O Control (SIOC), introduced in VMware vSphere 4.1 and enhanced in vSphere 5, provides a dynamic control mechanism for managing I/O resources across VMs in a cluster.

Datacenters based on VMware's virtualization products often employ a shared storage infrastructure to service clusters of vSphere hosts. NFS, iSCSI and storage area networks (SANs) expose logical storage devices (LUNs) that can be shared across a cluster of ESX hosts. Consolidating VMs' virtual disks onto a single VMFS or NFS datastore backed by a larger number of disks has several advantages: ease of management, better resource utilization, and higher performance (when storage is not bottlenecked).

In vSphere 4.1, SIOC can be used only with FC and iSCSI datastores; vSphere 5 adds support for NFS datastores.

However, there are instances when a higher than expected number of I/O-intensive VMs that share the same storage device become active at the same time. During this period of peak load, VMs contend with each other for storage resources. In such situations, lack of a control mechanism can lead to performance degradation of the VMs running critical workloads as they compete for storage resources with VMs running less critical workloads.

Storage I/O Control (SIOC) provides a fine-grained storage control mechanism by dynamically allocating portions of hosts' I/O queues to VMs running on the vSphere hosts based on the shares assigned to the VMs. Using SIOC, vSphere administrators can mitigate the performance loss of critical workloads during peak load periods by giving higher I/O priority (by means of disk shares) to the VMs running them. Setting I/O priorities for VMs results in better performance during periods of congestion.

So what is the advantage for a VM administrator or an organization? Nowadays, with vSphere 5, a single LUN can be up to 64TB, and high-end SANs with flash let us achieve much higher consolidation. That's great! But when we consolidate this heavily, we need a safeguard: just as CPU/memory resource pools ensure the compute SLA, SIOC ensures the virtual disk storage SLA and its response time.

In short, the benefits are:

– SIOC prioritizes VMs’ access to shared I/O resources based on disk shares assigned to them. During the periods of I/O congestion, VMs are allowed to use only a fraction of the shared I/O resources in proportion to their relative priority, which is determined by the disk shares.

– If the VMs do not fully utilize their portion of the allocated I/O resources on a shared datastore, SIOC redistributes the unutilized resources to those VMs that need them in proportion to VMs’ disk shares. This results in a fair allocation of storage resources without any loss in their utilization.

– SIOC minimizes fluctuations in the performance of critical workloads during periods of I/O congestion, delivering as much as a 26% performance benefit compared to an unmanaged scenario.

How Storage I/O Control Works

SIOC monitors the latency of I/Os to datastores at each ESX host sharing that device. When the average normalized datastore latency exceeds a set threshold (30ms by default), the datastore is considered to be congested, and SIOC kicks in to distribute the available storage resources to virtual machines in proportion to their shares. This is to ensure that low-priority workloads do not monopolize or reduce I/O bandwidth for high-priority workloads. SIOC accomplishes this by throttling back the storage access of the low-priority virtual machines by reducing the number of I/O queue slots available to them. Depending on the mix of virtual machines running on each ESX server and the relative I/O shares they have, SIOC may need to reduce the number of device queue slots that are available on a given ESX server.
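To make the trigger condition concrete, here is a simplified sketch of the check: per-host latency samples for one datastore are combined into an I/O-weighted average and compared with the 30 ms default threshold. This is an illustration under assumptions, not SIOC's actual internal computation, which vSphere performs itself; `is_congested` and the `latency_ms:iops` input format are hypothetical.

```shell
#!/bin/sh
# Simplified sketch of the congestion test: I/O-weighted average latency
# across hosts compared with a threshold (30 ms default, as in the text).
# is_congested and the "latency_ms:iops" format are hypothetical.
THRESHOLD_MS="${THRESHOLD_MS:-30}"

# usage: is_congested 25:400 42:900 ...
# prints the weighted average latency (ms, rounded down);
# returns 0 when it exceeds the threshold, 1 otherwise
is_congested() {
  total_io=0
  weighted=0
  for sample in "$@"; do
    lat="${sample%%:*}"   # text before the colon: latency in ms
    io="${sample##*:}"    # text after the colon: I/O count
    weighted=$((weighted + lat * io))
    total_io=$((total_io + io))
  done
  if [ "$total_io" -le 0 ]; then
    echo 0
    return 1
  fi
  avg=$((weighted / total_io))
  echo "$avg"
  [ "$avg" -gt "$THRESHOLD_MS" ]
}
```

For example, `is_congested 25:400 42:900` prints `36` and returns success: the busier host's 42 ms pulls the weighted average above 30 ms even though the other host sees only 25 ms.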

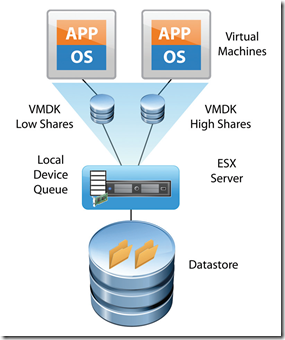

It is important to understand how queuing works in the VMware virtualized storage stack to see clearly how SIOC functions. SIOC leverages the existing host device queue to control I/O prioritization. Prior to vSphere 4.1, ESX server device queues were static, and virtual-machine storage access was controlled only within the context of the storage traffic on a single ESX server host. With vSphere 4.1 and 5, SIOC provides datastore-wide disk scheduling that responds to congestion at the array, not just at the host-side HBA. This makes it possible to monitor and dynamically modify the size of each ESX server's device queues based on storage traffic and the priorities of all the virtual machines accessing the shared datastore. An example of a local host-level disk scheduler is as follows:

Figure 1 shows the local scheduler influencing ESX host-level prioritization as two virtual machines are running on the same ESX server with a single virtual disk on each.

Figure 1. I/O Shares for Two Virtual Machines on a Single ESX Server (Host-Level Disk Scheduler)

When the I/O shares for the virtual disks (VMDKs) of those virtual machines are set to different values, the local scheduler prioritizes the I/O traffic only if the local HBA becomes congested. This host-level capability existed in ESX Server for several years before vSphere 4.1. It is this local host-level disk scheduler that also enforces the limits set for a given virtual-machine disk: if a limit is set for a VMDK, the local disk scheduler controls its I/O so that it does not exceed the defined number of I/Os per second.

From vSphere 4.1 onwards, two key capabilities were added: (1) the enforcement of I/O prioritization across all ESX servers that share a common datastore, and (2) detection of array-side bottlenecks. These are accomplished by a datastore-wide distributed disk scheduler that uses I/O shares per virtual machine to determine whether device queues need to be throttled back on a given ESX server to allow a higher-priority workload to get better performance.

The datastore-wide disk scheduler totals up the disk shares for all the VMDKs that a virtual machine has on the given datastore. The scheduler then calculates what percentage of the shares the virtual machine has compared to the total number of shares of all the virtual machines running on the datastore. As described before, SIOC engages only after a certain device-level latency is detected on the datastore. Once engaged, it begins to assign fewer I/O queue slots to virtual machines with lower shares and more I/O queue slots to virtual machines with higher shares. It throttles back the I/O for the lower-priority virtual machines, those with fewer shares, in exchange for the higher-priority virtual machines getting more access to issue I/O traffic.

However, it is important to understand that the maximum number of I/O queue slots that can be used by the virtual machines on a given host cannot exceed the maximum device-queue depth for the device queue of that ESX host.
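The share arithmetic in the last two paragraphs can be illustrated with a small calculation. This is a simplified sketch, assuming a single host: the slots available to its VMs are first capped at the host's device queue depth, then divided in proportion to each VM's disk shares (vSphere's per-disk presets are Low 500, Normal 1000 and High 2000, and a VM's value here is the sum over its VMDKs on the datastore). `allocate_slots` and the `vm:shares` input format are hypothetical, and the real scheduler adjusts queue depths continuously rather than in one pass.

```shell
#!/bin/sh
# Simplified sketch of share-proportional queue-slot allocation on one
# host. allocate_slots and the vm:shares format are hypothetical.
# usage: allocate_slots SLOTS QUEUE_DEPTH vm1:shares [vm2:shares ...]
allocate_slots() {
  slots="$1"
  depth="$2"
  shift 2
  # the VMs on a host can never use more slots than its device queue depth
  if [ "$slots" -gt "$depth" ]; then
    slots="$depth"
  fi
  # shares per VM = sum of its VMDK shares on this datastore (pre-summed)
  sum=0
  for entry in "$@"; do
    sum=$((sum + ${entry##*:}))
  done
  if [ "$sum" -le 0 ]; then
    echo "error: total shares must be positive" >&2
    return 1
  fi
  for entry in "$@"; do
    # integer division rounds down, so a few slots may stay unassigned
    echo "${entry%%:*} $((slots * ${entry##*:} / sum))"
  done
}
```

For example, `allocate_slots 32 64 critical:2000 lowprio:500` prints `critical 25` and `lowprio 6`: the critical VM, with four times the shares, gets about four times the queue slots.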

What conditions are required for SIOC to work?

– A large datastore containing many VMs, shared between multiple ESX hosts.

– Disk shares set for the VMs on the datastore.

– An SIOC congestion threshold chosen to suit the backing storage of the LUN or NFS export (SSD, SAS or SATA), with SIOC enabled on the datastore.

– SIOC then monitors datastore I/O usage, VM latency, and overall storage array performance and latency.

SIOC does not account for the following:

Latency caused by other systems using the same array, for example when the array is also shared with physical hosts or used by backup applications and jobs. In that case SIOC raises what can look like false alarms, such as “[VMware vCenter – Alarm Non-VI workload detected on the datastore] An unmanaged I/O workload is detected on a SIOC-enabled datastore”.

Tricky question: will it work with a single ESXi host? Simple answer: SIOC is designed for multiple ESXi hosts sharing a datastore that contains many VMs. You can enable SIOC for a single host if you have the license, but it is not intended for this case.

So what is the solution for a single ESXi host? Simply set shares on the VMDKs; the ESXi host-level disk scheduler then enforces them and ensures the disk SLA.

So is there any real advantage to SIOC in a real-world scenario? My experience below shows that there is.

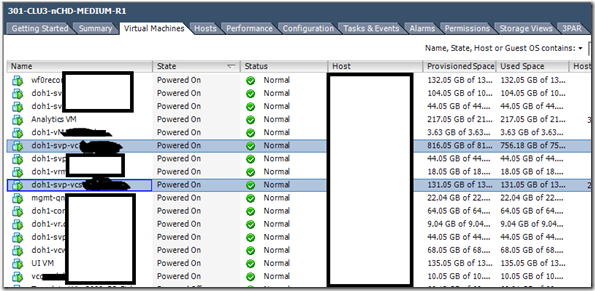

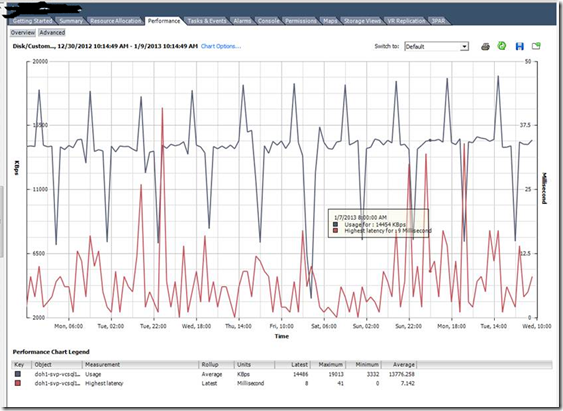

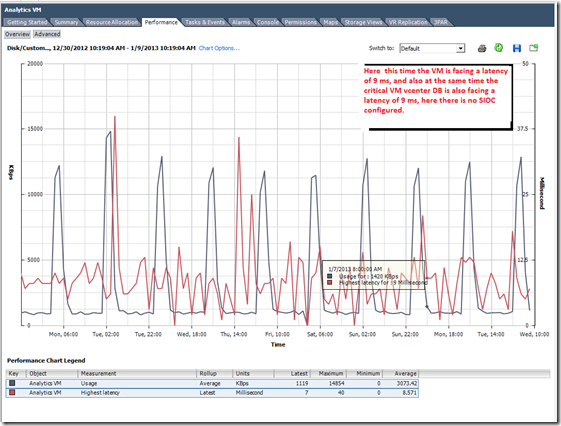

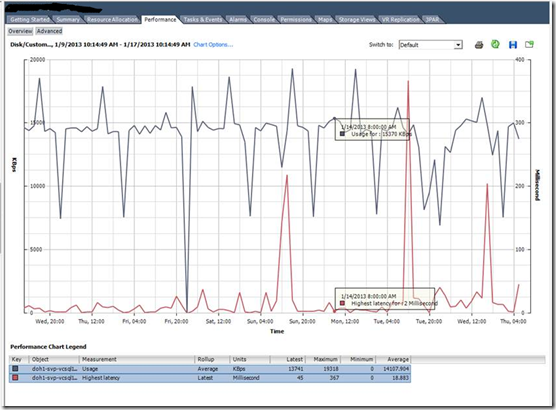

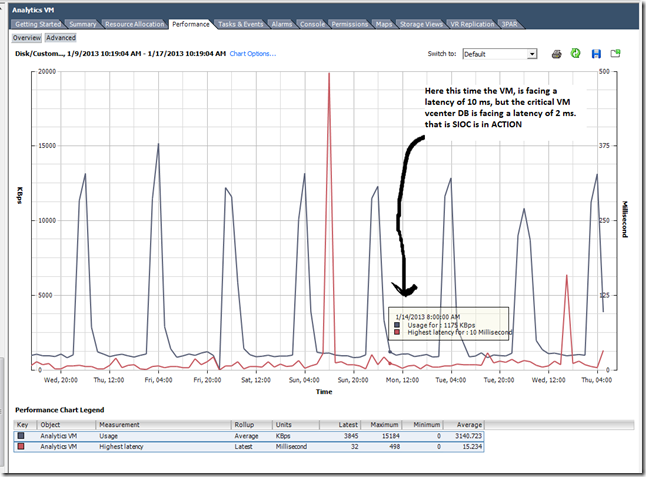

My scenario: I have 4 ESXi 5 hosts in a cluster, with many FC LUNs from HP 3PAR storage arrays mounted. On one of the LUNs we put around 16 VMs, including our vCenter Server and its database. That datastore also holds a less critical VM (the Analytics VM from the vCOPS appliance) that consumes a lot of IOPS and eventually affects the other, critical VMs. We enabled SIOC, monitored it for 10 days, and then compared VMDK performance and latency during peak hours.

The datastore in this test holds many VMs and is shared across 4 hosts.

With SIOC disabled.

With SIOC enabled.

So what is the takeaway with SIOC?

Better I/O response time, reduced latency and an ensured disk SLA for critical VMs. The bottom line is that critical VMs are not affected during storage contention, even in a highly consolidated environment.

REFERENCES:

http://blogs.vmware.com/vsphere/2012/03/debunking-storage-io-control-myths.html

http://blogs.vmware.com/vsphere/2011/09/storage-io-control-enhancements.html

http://www.vmware.com/files/pdf/techpaper/VMW-vSphere41-SIOC.pdf