Multi-NIC vMotion Speed and Performance in vSphere 5.x – Optimum bandwidth allocation

During a recent vSphere 5 implementation for a client, I tested a few cases around vMotion speed and its network bandwidth utilization, and got some very interesting results.

Test Environment Specifications

Hardware

a) Enclosure – BladeSystem c7000 Enclosure G2

b) ProLiant BL680c G7 Blade

c) HP VC FlexFabric 10Gb/24-Port Module

d) HP NC553i 10Gb 2-port FlexFabric Converged Network Adapter (Emulex OneConnect OCe111000 10GbE, FCoE UCNA)

e) Brocade BR-DCX4S-0001-A, DCX-4S Backbone Fibre Channel Switch

f) HP 3PAR T400 Storage System

g) CPU – Intel Xeon CPU E7-4820, 2GHz

h) RAM – 512 GB

Software

a) VMware vSphere 5 Enterprise Plus license

b) VMware ESXi 5.0.0 build-623860

c) VMware vCenter 5 Standard

Test Input

a) A Windows 2008 R2 64-bit virtual machine, with 2 vCPUs and 4GB RAM

b) A Windows 2008 R2 64-bit virtual machine, with 2 vCPUs and 8GB RAM

c) A Windows 2008 R2 64-bit virtual machine, with 2 vCPUs and 16GB RAM

d) A Windows 2008 R2 64-bit virtual machine, with 2 vCPUs and 32GB RAM

e) VMware Standard Switch, with 2 x 1GbE pNIC uplinks (CASE-1)

f) VMware Standard Switch, with 2 x 2GbE pNIC uplinks (CASE-2)

g) VMware Distributed Switch, with 2 x 2GbE pNIC uplinks (CASE-3)

Test Procedure

Steps:

1. Configure multi-NIC vMotion on the ESXi hosts, as per VMware KB 2007467: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2007467

2. In the HP VC, set the speed of the pNICs to 1GbE and vMotion 3 virtual machines from one host to another. (CASE-1)

3. In the HP VC, set the speed of the pNICs to 2GbE and vMotion 3 virtual machines from one host to another. (CASE-2)

4. Configure the VMware Distributed Switch and multi-NIC vMotion on it.

5. vMotion 3 virtual machines from one host to another. (CASE-3)

Normally an ESXi cluster has multiple vMotions happening in the background, and putting an ESXi host into maintenance mode triggers even more. If every vMotion completes quickly, the host enters maintenance mode quickly. For monster VMs with 32GB or 64GB of RAM, a faster live migration is also a great relief.

Now the tricky part: what pNIC link speed should we use? Is it 1GbE, 10GbE, or something in between? There are 3 cases we can consider:

1 – Conventional server adapters, where we get 1GbE pNICs.

2 – 10GbE cards, where we normally use NetIOC to partition the card among ESXi traffic types such as vMotion, FT, management, and VM traffic, and set a policy and bandwidth for each.

3 – HP blades, where we use FlexNICs, a FlexFabric Converged Network Adapter, and Flex networking in HP Virtual Connect.

From my understanding and some Googling, below are the findings.

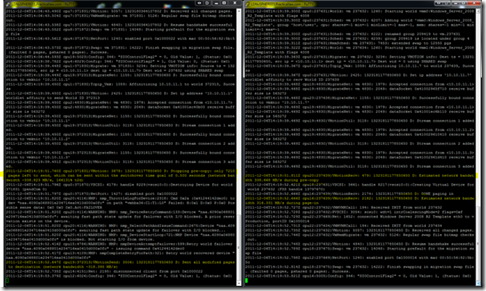

Issue the command tail -f /var/log/vmkernel.log and then initiate a vMotion. You should get output like this:

Below is the result I got with the pNICs set to 1GbE.

vMotion with 1G speed (vSS)

2012-07-15T04:31:29.171Z cpu54:9720)Config: 346: “SIOControlFlag2” = 1, Old Value: 0, (Status: 0x0)

2012-07-15T04:31:31.306Z cpu0:16528)Migrate: vm 16529: 3234: Setting VMOTION info: Source ts = 1342326689209133, src ip = <2.2.2.1> dest ip = <2.2.2.3> Dest wid = 152453 using SHARED swap

2012-07-15T04:31:31.309Z cpu0:16528)Tcpip_Vmk: 1059: Affinitizing 2.2.2.1 to world 155824, Success

2012-07-15T04:31:31.309Z cpu0:16528)VMotion: 2425: 1342326689209133 S: Set ip address ‘2.2.2.1’ worldlet affinity to send World ID 155824

2012-07-15T04:31:31.310Z cpu0:155824)MigrateNet: 1158: 1342326689209133 S: Successfully bound connection to vmknic ‘2.2.2.1’

2012-07-15T04:31:31.311Z cpu0:155824)MigrateNet: 1158: 1342326689209133 S: Successfully bound connection to vmknic ‘2.2.2.1’

2012-07-15T04:31:31.311Z cpu2:8992)MigrateNet: vm 8992: 1982: Accepted connection from <2.2.2.3>

2012-07-15T04:31:31.311Z cpu2:8992)MigrateNet: vm 8992: 2052: dataSocket 0x4100368ec610 receive buffer size is 563272

2012-07-15T04:31:31.311Z cpu0:155824)VMotionUtil: 3087: 1342326689209133 S: Stream connection 1 added.

2012-07-15T04:31:31.311Z cpu0:155824)MigrateNet: 1158: 1342326689209133 S: Successfully bound connection to vmknic ‘2.2.2.2’

2012-07-15T04:31:31.312Z cpu0:155824)VMotionUtil: 3087: 1342326689209133 S: Stream connection 2 added.

2012-07-15T04:31:39.081Z cpu4:16529)VMotion: 3878: 1342326689209133 S: Stopping pre-copy: only 2280 pages left to send, which can be sent within the switchover time goal of 0.500 seconds (network bandwidth ~175.458 MB/s, 955747% t2d)

2012-07-15T04:31:39.129Z cpu6:16529)NetPort: 1427: disabled port 0x100000b

2012-07-15T04:31:39.129Z cpu6:16688)VSCSI: 6226: handle 8199(vscsi0:0):Destroying Device for world 16529 (pendCom 0)

2012-07-15T04:31:39.405Z cpu46:155824)VMotionSend: 3508: 1342326689209133 S: Sent all modified pages to destination (network bandwidth ~234.162 MB/s)

2012-07-15T04:31:39.649Z cpu30:8287)Net: 2195: disconnected client from port 0x100000b

Below is the result I got with the pNICs set to 2GbE.

vMotion with 2G speed (vSS)

2012-07-15T13:30:18.467Z cpu10:11400)Migrate: vm 11401: 3234: Setting VMOTION info: Source ts = 1342359016928867, src ip = <2.2.2.1> dest ip = <2.2.2.3> Dest wid = 21083 using SHARED swap

2012-07-15T13:30:18.470Z cpu59:12009)MigrateNet: 1158: 1342359016928867 S: Successfully bound connection to vmknic ‘2.2.2.1’

2012-07-15T13:30:18.471Z cpu10:11400)Tcpip_Vmk: 1059: Affinitizing 2.2.2.1 to world 12009, Success

2012-07-15T13:30:18.471Z cpu10:11400)VMotion: 2425: 1342359016928867 S: Set ip address ‘2.2.2.1’ worldlet affinity to send World ID 12009

2012-07-15T13:30:18.471Z cpu37:8992)MigrateNet: vm 8992: 1982: Accepted connection from <2.2.2.3>

2012-07-15T13:30:18.471Z cpu37:8992)MigrateNet: vm 8992: 2052: dataSocket 0x410036859910 receive buffer size is 563272

2012-07-15T13:30:18.471Z cpu63:8255)NetSched: 4357: hol queue 3 reserved for fifo scheduler on port 0x6000002

2012-07-15T13:30:18.472Z cpu59:12009)MigrateNet: 1158: 1342359016928867 S: Successfully bound connection to vmknic ‘2.2.2.1’

2012-07-15T13:30:18.472Z cpu59:12009)VMotionUtil: 3087: 1342359016928867 S: Stream connection 1 added.

2012-07-15T13:30:18.472Z cpu59:12009)MigrateNet: 1158: 1342359016928867 S: Successfully bound connection to vmknic ‘2.2.2.2’

2012-07-15T13:30:18.472Z cpu59:12009)VMotionUtil: 3087: 1342359016928867 S: Stream connection 2 added.

2012-07-15T13:30:21.838Z cpu12:11401)VMotion: 3878: 1342359016928867 S: Stopping pre-copy: only 1476 pages left to send, which can be sent within the switchover time goal of 0.500 seconds (network bandwidth ~455.910 MB/s, 967822% t2d)

2012-07-15T13:30:21.862Z cpu12:11401)NetPort: 1427: disabled port 0x1000008

2012-07-15T13:30:21.863Z cpu12:11648)VSCSI: 6226: handle 8196(vscsi0:0):Destroying Device for world 11401 (pendCom 0)

2012-07-15T13:30:22.014Z cpu62:12009)VMotionSend: 3508: 1342359016928867 S: Sent all modified pages to destination (network bandwidth ~442.297 MB/s)

2012-07-15T13:30:22.332Z cpu16:8287)Net: 2195: disconnected client from port 0x1000008

Below is the result I got with the pNICs set to 2GbE on the Distributed Switch, for three virtual machines.

vMotion with 2G speed (vDS)

2012-08-05T09:23:11.960Z cpu12:10134)Config: 346: “SIOControlFlag2” = 1, Old Value: 0, (Status: 0x0)

2012-08-05T09:23:12.857Z cpu0:207996)Migrate: vm 207997: 3234: Setting VMOTION info: Source ts = 1344158592033676, src ip = <2.2.2.11> dest ip = <2.2.2.13> Dest wid = 208920 using SHARED swap

2012-08-05T09:23:16.857Z cpu8:207997)VMotion: 3878: 1344158592033676 S: Stopping pre-copy: only 1754 pages left to send, which can be sent within the switchover time goal of 0.500 seconds (network bandwidth ~310.281 MB/s, 444548% t2d)

2012-08-05T09:23:17.053Z cpu50:209304)VMotionSend: 3508: 1344158592033676 S: Sent all modified pages to destination (network bandwidth ~413.803 MB/s)

2012-08-05T09:23:22.022Z cpu58:10132)Config: 346: “SIOControlFlag2” = 1, Old Value: 0, (Status: 0x0)

2012-08-05T09:23:23.685Z cpu13:208163)Migrate: vm 208164: 3234: Setting VMOTION info: Source ts = 1344158602063839, src ip = <2.2.2.11> dest ip = <2.2.2.13> Dest wid = 209100 using SHARED swap

2012-08-05T09:23:31.213Z cpu14:208164)VMotion: 3878: 1344158602063839 S: Stopping pre-copy: only 1468 pages left to send, which can be sent within the switchover time goal of 0.500 seconds (network bandwidth ~279.111 MB/s, 8217349% t2d)

2012-08-05T09:23:31.525Z cpu32:209313)VMotionSend: 3508: 1344158602063839 S: Sent all modified pages to destination (network bandwidth ~299.067 MB/s)

2012-08-05T09:23:22.022Z cpu58:10132)Config: 346: “SIOControlFlag2” = 1, Old Value: 0, (Status: 0x0)

2012-08-05T09:23:37.105Z cpu4:208338)Migrate: vm 208339: 3234: Setting VMOTION info: Source ts = 1344158615837668, src ip = <2.2.2.11> dest ip = <2.2.2.13> Dest wid = 209303 using SHARED swap

2012-08-05T09:23:58.429Z cpu8:208339)VMotion: 3878: 1344158615837668 S: Stopping pre-copy: only 2530 pages left to send, which can be sent within the switchover time goal of 0.500 seconds (network bandwidth ~360.240 MB/s, 1768884% t2d)

2012-08-05T09:23:58.769Z cpu50:209505)VMotionSend: 3508: 1344158615837668 S: Sent all modified pages to destination (network bandwidth ~302.745 MB/s)
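Picking the bandwidth figures out of these logs by eye gets tedious, so here is a minimal Python sketch that extracts them. It assumes the log lines keep the exact "network bandwidth ~N MB/s" wording shown above; the function name and the "pre-copy"/"final" labels are my own, not anything ESXi defines.

```python
import re

# Matches the "network bandwidth ~NNN.NNN MB/s" fragment seen in the vMotion log lines above.
BANDWIDTH_RE = re.compile(r"network bandwidth ~(\d+(?:\.\d+)?) MB/s")


def extract_bandwidths(lines):
    """Return (event, MB/s) pairs for every log line that reports a vMotion bandwidth."""
    results = []
    for line in lines:
        match = BANDWIDTH_RE.search(line)
        if not match:
            continue  # not a bandwidth line, skip it
        # "Stopping pre-copy" lines report the rate at switchover;
        # "Sent all modified pages" lines report the rate at the end of the transfer.
        event = "pre-copy" if "Stopping pre-copy" in line else "final"
        results.append((event, float(match.group(1))))
    return results


# Three lines copied from the 1G (vSS) log above.
sample = [
    "2012-07-15T04:31:39.081Z cpu4:16529)VMotion: 3878: 1342326689209133 S: Stopping pre-copy: only 2280 pages left to send, which can be sent within the switchover time goal of 0.500 seconds (network bandwidth ~175.458 MB/s, 955747% t2d)",
    "2012-07-15T04:31:39.129Z cpu6:16529)NetPort: 1427: disabled port 0x100000b",
    "2012-07-15T04:31:39.405Z cpu46:155824)VMotionSend: 3508: 1342326689209133 S: Sent all modified pages to destination (network bandwidth ~234.162 MB/s)",
]

for event, mb_per_s in extract_bandwidths(sample):
    print(f"{event}: {mb_per_s} MB/s")
```

To run it against a saved copy of the log, pass an open file object instead of the sample list, since the function only needs an iterable of lines.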

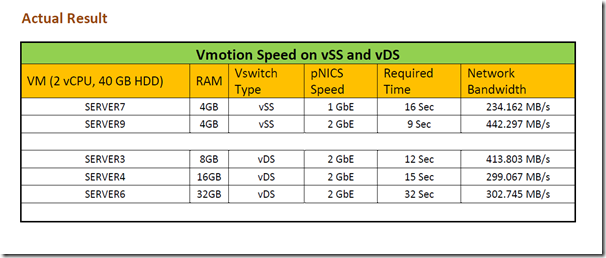

Based on the above results, with 1GbE pNICs we may get a speed somewhere below 250 MB/s to 350 MB/s, and with 2GbE up to 10GbE pNICs we may get a speed below 600 MB/s.
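To put the logged figures next to the nominal capacity of the uplinks, here is a small Python sketch. It assumes 1 Gb/s equals 125 MB/s (decimal units), ignores protocol overhead, and assumes both uplinks carry vMotion traffic at the same time. The observed values are the final "Sent all modified pages" rates from the logs above; for CASE-3 I took the first of the three VMs. The function names are my own.

```python
def nominal_mb_per_s(link_gbps, uplinks):
    """Nominal capacity in MB/s, assuming 1 Gb/s = 125 MB/s and no protocol overhead."""
    return link_gbps * 125.0 * uplinks


def utilization(observed_mb_per_s, link_gbps, uplinks=2):
    """Fraction of the nominal capacity that the observed rate represents."""
    return observed_mb_per_s / nominal_mb_per_s(link_gbps, uplinks)


# (case name, observed MB/s from the logs, per-uplink speed in Gb/s)
cases = [
    ("CASE-1 vSS 2 x 1GbE", 234.162, 1),
    ("CASE-2 vSS 2 x 2GbE", 442.297, 2),
    ("CASE-3 vDS 2 x 2GbE (first VM)", 413.803, 2),
]

for name, observed, gbps in cases:
    nominal = nominal_mb_per_s(gbps, 2)
    share = utilization(observed, gbps)
    print(f"{name}: {observed} MB/s of {nominal:.0f} MB/s nominal = {share:.0%}")
```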

So why are we not getting the FULL bandwidth of the pNICs, and why is vMotion not using the FULL 1GbE link or above?

During vMotion, ESXi 5.x checks the link speed of the pNICs attached to the vSwitch and, based on this, adjusts the receive buffer size up to 550 KB (550 KB is constant and hardcoded; the logs above show 563272 bytes). With this buffer, the theoretical maximum we can get is about 600 MB/s. In ESX/ESXi 4.x, vMotion uses a “buffer size of 263536”, which is roughly 257 KB; I did not test this on vSphere 4.x.
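As a rough check on these numbers, the Python sketch below converts the two buffer sizes to KB and applies the general TCP rule of thumb that one stream can move at most one buffer of data per round trip. Whether vMotion is actually limited in this way is my assumption, and the 1 ms round-trip time is a hypothetical value, not something I measured.

```python
def bytes_to_kib(n_bytes):
    """Convert a byte count to KiB (1 KiB = 1024 bytes)."""
    return n_bytes / 1024


def window_limited_mb_per_s(buffer_bytes, rtt_seconds):
    """Upper bound for one TCP stream: at most one buffer of data per round trip."""
    return buffer_bytes / rtt_seconds / 1_000_000


ESXI5_BUFFER = 563272  # from the "receive buffer size is 563272" log line
ESX4_BUFFER = 263536   # figure quoted for ESX/ESXi 4.x, not tested here

print(f"ESXi 5.x buffer: {bytes_to_kib(ESXI5_BUFFER):.1f} KiB")
print(f"ESX 4.x buffer:  {bytes_to_kib(ESX4_BUFFER):.1f} KiB")

ASSUMED_RTT = 0.001  # hypothetical 1 ms round trip between the two hosts
for label, size in (("ESXi 5.x", ESXI5_BUFFER), ("ESX 4.x", ESX4_BUFFER)):
    bound = window_limited_mb_per_s(size, ASSUMED_RTT)
    print(f"{label}: at most {bound:.0f} MB/s per stream at {ASSUMED_RTT * 1000:.0f} ms RTT")
```

With a shorter round trip the bound rises and with a longer one it falls, so the result is only as good as the RTT you plug in.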

As we all know from the configuration maximums, we can run 4 concurrent vMotions per host over 1GbE pNICs and 8 over 10GbE pNICs. So with 1GbE we get around 300 MB/s and can have 4 concurrent vMotions, and with 2GbE and above we get only up to 600 MB/s and can have 8 vMotions. It's simple MATH!
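The concurrency rule can be written down as a tiny Python sketch. It follows the 4 and 8 limits quoted above, and uses the threshold described in the reader comment at the end of this post: anything that reports less than 10GbE gets the lower limit. The function name is hypothetical, and this only models the rule as described here, not vCenter's actual code.

```python
def max_concurrent_vmotions(link_speed_gbps):
    """Per-host vMotion limit: 8 when the NIC reports 10GbE or more, otherwise 4."""
    return 8 if link_speed_gbps >= 10 else 4


for speed in (1, 2, 10):
    print(f"{speed}GbE link: up to {max_concurrent_vmotions(speed)} concurrent vMotions")
```

Under this reading, a FlexNIC set to 2GbE would still fall under the limit of 4, which is worth checking on your own hosts before planning around 8.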

VMware may have coded it this way, and this may be the reason we are not getting the full bandwidth of the pNICs: there is a relation between the BUFFER SIZE, the LINK SPEED, and the final transfer rate.

In short, even if we use 10GbE pNICs, vMotion won't use their full capacity; only the number of concurrent vMotions we can perform will increase.

So what is the ADVANTAGE of giving the pNICs more than 1Gb of bandwidth?

Because of the larger buffer size and the use of multi-NIC vMotion in vSphere 5, we get better utilization of the pNICs, as the vMotion traffic is distributed across all of them. So from the above TEST, I believe that when we use 10GbE CNAs and FlexNICs, we need to give the vMotion network a 2Gb link speed. This will improve the vMotion throughput, so each migration takes less time to complete, and the number of concurrent vMotions will increase.
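To get a feel for what the throughput difference means per VM, here is a rough Python estimate of how long one pass over a VM's memory takes at the final rates logged above for the 1GbE and 2GbE vSS cases. It assumes the full configured RAM is sent exactly once and ignores pages dirtied during the copy, so treat the numbers as ballpark figures only. The function name is my own.

```python
def precopy_seconds(ram_gb, throughput_mb_per_s):
    """Rough time to copy a VM's memory once, ignoring pages dirtied during the copy."""
    return ram_gb * 1024 / throughput_mb_per_s


RATE_1GBE = 234.162  # MB/s, final rate from the 1G (vSS) log
RATE_2GBE = 442.297  # MB/s, final rate from the 2G (vSS) log

# RAM sizes of the four test VMs listed under Test Input.
for ram_gb in (4, 8, 16, 32):
    t1 = precopy_seconds(ram_gb, RATE_1GBE)
    t2 = precopy_seconds(ram_gb, RATE_2GBE)
    print(f"{ram_gb:>2} GB VM: ~{t1:.0f} s at 1GbE, ~{t2:.0f} s at 2GbE")
```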

Moreover, in a well-balanced ESX cluster that is not oversubscribed, there will be very few vMotions happening inside the CLUSTER, so dedicating a 10GbE card to vMotion is a WASTE.

So the OPTIMUM LINK SPEED and BANDWIDTH allocation for the vMotion network is 2GbE.

Posted on January 12, 2013.

![clip_image002[4] clip_image002[4]](https://pibytes.files.wordpress.com/2013/01/clip_image0024_thumb.jpg?w=505&h=110)

![clip_image002[6] clip_image002[6]](https://pibytes.files.wordpress.com/2013/01/clip_image0026_thumb.jpg?w=695&h=149)

The number of concurrent vMotions is based on the detected link speed. If the physical NIC reports less than 10GbE, vCenter allows a maximum of 4 concurrent vMotions on that host.

It’s a good attempt.