
Deduplication Internals – Source Side & Target Side Deduplication: Part-4

Continuing my deduplication series (Part 1, Part 2, Part 3), this part discusses where the deduplication happens. Based on where the dedupe process runs, there are two types: source side (or client side) and target side. Both are widely used in backup technology, in backup software as well as hardware appliances, such as IBM Tivoli Storage Manager (TSM), Symantec Backup Exec, EMC Avamar, NetBackup etc.

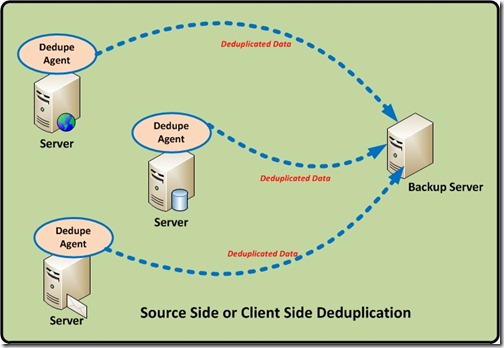

Source or Client Side Deduplication.

As the name suggests, the entire deduplication happens on the client (the servers being backed up). Dedicated deduplication agents are installed on the servers, and these agents use the CPU/RAM of the server to perform the deduplication. This distributes the deduplication processing overhead across multiple systems.

Source-side deduplication typically uses a client-located deduplication engine that checks for duplicates against a centrally located deduplication index, typically on the backup server or media server. Only unique blocks are transmitted to the backup storage. With less data to transfer, the backup window is also reduced.

Source-based deduplication processes the data before it goes over the network. This reduces the amount of data that must be transmitted, which can be important in environments with constrained bandwidth. In other words, the backup needs less network bandwidth.
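To make the flow concrete, here is a minimal Python sketch of a source-side backup. It is not how any particular product works: the `BackupServer` class, its `missing` and `upload` methods, the 4 KB chunk size and the use of SHA-1 are all assumptions made for illustration, and the small cost of sending the fingerprints themselves is ignored.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size for this sketch


def fingerprint(chunk: bytes) -> str:
    return hashlib.sha1(chunk).hexdigest()


class BackupServer:
    """Hypothetical server holding the central index and the chunk store."""

    def __init__(self):
        self.store = {}  # fingerprint -> chunk bytes

    def missing(self, fingerprints):
        """Return the fingerprints the server has not stored yet."""
        return {fp for fp in fingerprints if fp not in self.store}

    def upload(self, fp, chunk):
        self.store[fp] = chunk


def source_side_backup(data: bytes, server: BackupServer) -> int:
    """Hash on the client, send only chunks the server lacks; return bytes sent."""
    chunks = [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]
    by_fp = {fingerprint(c): c for c in chunks}
    sent = 0
    for fp in server.missing(by_fp):
        server.upload(fp, by_fp[fp])
        sent += len(by_fp[fp])
    return sent


server = BackupServer()
monday = b"A" * 4096 + b"B" * 4096 + b"C" * 4096
tuesday = b"A" * 4096 + b"B" * 4096 + b"D" * 4096  # only the last chunk changed
first_sent = source_side_backup(monday, server)
second_sent = source_side_backup(tuesday, server)
print(first_sent, second_sent)  # 12288 4096
```

The second backup sends only the one chunk the server has not seen, which is where the bandwidth saving comes from. The price is that the hashing runs on the client.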

This method is often used in situations where remote offices must back up data to the primary data center. In this case, deduplication becomes the responsibility of the client server.

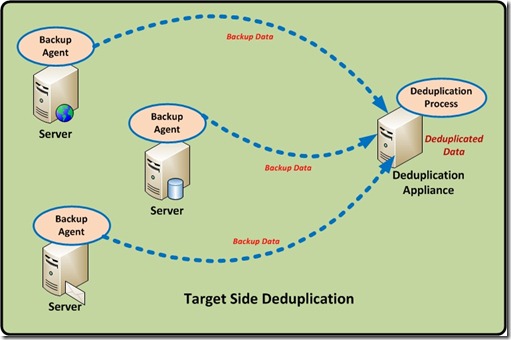

Target Side Deduplication.

Target-based deduplication requires that the target backup server or a dedicated hardware dedupe appliance handles all of the deduplication. This means no overhead on the client or server being backed up. This solution is transparent to existing workflows, so it creates minimal disruption. However, it requires more network resources because the original data, with all its redundancy, must go over the network.
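The trade-off can be sketched in a few lines of Python. The `TargetAppliance` class below is purely hypothetical, and the 4 KB chunk size and SHA-1 fingerprints are assumptions. The point is only that the client ships the full stream while all hashing happens on the target.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed chunk size for this sketch


class TargetAppliance:
    """Hypothetical target that receives full streams and dedupes them itself."""

    def __init__(self):
        self.store = {}          # fingerprint -> unique chunk
        self.bytes_received = 0  # everything that crossed the network

    def ingest(self, stream: bytes):
        self.bytes_received += len(stream)
        for i in range(0, len(stream), CHUNK_SIZE):
            chunk = stream[i:i + CHUNK_SIZE]
            # Keep the chunk only if its fingerprint is new.
            self.store.setdefault(hashlib.sha1(chunk).hexdigest(), chunk)

    def bytes_stored(self) -> int:
        return sum(len(c) for c in self.store.values())


appliance = TargetAppliance()
backup = b"A" * 4096 + b"B" * 4096 + b"A" * 4096
appliance.ingest(backup)  # first backup
appliance.ingest(backup)  # identical second backup
print(appliance.bytes_received, appliance.bytes_stored())  # 24576 8192
```

All 24 KB travel over the network, but only 8 KB end up on disk, and the client never computes a single hash.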

A target deduplication solution is typically a purpose-built appliance whose hardware and software stack are tuned to deliver optimal performance, and it uses no additional CPU/RAM resources on the client servers.

Dell DR4000, Symantec NetBackup PureDisk, Symantec NetBackup 5220, EMC Avamar, HP StoreOnce, EMC’s Data Domain, ExaGrid Systems’ DeltaZone, NEC’s HYDRAstor, IBM’s ProtecTIER, Sepaton’s DeltaStor and Quantum DXi are the main examples that use this type of deduplication.

My next post will be about inline and post-process deduplication. Stay tuned!

Deduplication Internals – Hash based deduplication : Part-2

In this second part, we discuss the techniques used to do the deduplication.

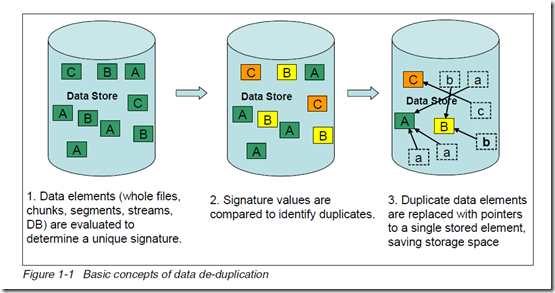

Different data de-duplication products use different methods of breaking up the data into elements, chunks or blocks, but each product uses some technique to create a signature (also called an identifier or fingerprint) for each data element. As shown in the figure, the data store contains three unique data elements, A, B, and C, each with a distinct signature. These signature values are compared to identify duplicate data. After the duplicate data is identified, one copy of each element is retained, pointers are created for the duplicate items, and the duplicate items are not stored.

The basic concepts of data de-duplication are illustrated in the figure above.

Based on the technology, or how it is done, two methods frequently used for de-duplicating data are hash based and content aware.

1 – Hash based Deduplication

Hash based data de-duplication methods use a hashing algorithm to identify “chunks” of data. Commonly used algorithms are Secure Hash Algorithm 1 (SHA-1) and Message-Digest Algorithm 5 (MD5). When data is processed by a hashing algorithm, a hash is created that represents the data. A hash is a bit string (128 bits for MD5 and 160 bits for SHA-1) that represents the data processed. If you process the same data through the hashing algorithm multiple times, the same hash is created each time.

Here are some examples of hash codes:

MD5 – 16 byte long hash

– # echo "The Quick Brown Fox Jumps Over the Lazy Dog" | md5sum

9d56076597de1aeb532727f7f681bcb0

– # echo "The Quick Brown Fox Dumps Over the Lazy Dog" | md5sum

5800fccb352352308b02d442170b039d

SHA-1 – 20 byte long hash

– # echo "The Quick Brown Fox Jumps Over the Lazy Dog" | sha1sum

f68f38ee07e310fd263c9c491273d81963fbff35

– # echo "The Quick Brown Fox Dumps Over the Lazy Dog" | sha1sum

d4e6aa9ab83076e8b8a21930cc1fb8b5e5ba2335
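The same properties can be checked with Python’s standard `hashlib` module. This is only a small sketch: the trailing `\n` is added because `echo` appends a newline, and the variable names are mine.

```python
import hashlib

# echo appends a newline, so it is included in the bytes that get hashed.
jumps = b"The Quick Brown Fox Jumps Over the Lazy Dog\n"
dumps = b"The Quick Brown Fox Dumps Over the Lazy Dog\n"

md5_bytes = hashlib.md5(jumps).digest_size    # 16 bytes = 128 bits
sha1_bytes = hashlib.sha1(jumps).digest_size  # 20 bytes = 160 bits

# Hashing the same data again always gives the same hash.
repeatable = hashlib.sha1(jumps).hexdigest() == hashlib.sha1(jumps).hexdigest()

# Changing a single letter gives a different hash.
differs = hashlib.sha1(jumps).hexdigest() != hashlib.sha1(dumps).hexdigest()

print(md5_bytes, sha1_bytes, repeatable, differs)  # 16 20 True True
```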

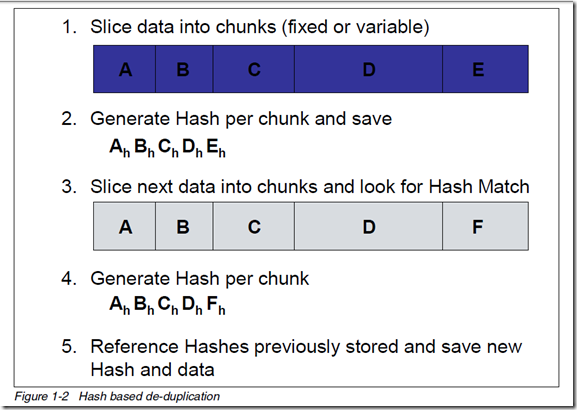

Hash based de-duplication breaks data into “chunks”, either fixed or variable length, and processes the “chunk” with the hashing algorithm to create a hash. If the hash already exists, the data is deemed to be a duplicate and is not stored. If the hash does not exist, then the data is stored and the hash index is updated with the new hash.

In Figure 1-2, data “chunks” A, B, C, D, and E are processed by the hash algorithm, which creates hashes Ah, Bh, Ch, Dh, and Eh; for purposes of this example, we assume this is all new data.

Later, “chunks” A, B, C, D, and F are processed. F generates a new hash Fh. Since A, B, C, and D generated the same hash, the data is presumed to be the same data, so it is not stored again. Since F generates a new hash, the new hash and new data are stored.
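The A to F walk-through can be reproduced with a few lines of Python. This is a minimal sketch, not any vendor’s implementation: the `backup` function, the in-memory `index` set and `store` list, and the one-byte “chunks” are all assumptions made for illustration.

```python
import hashlib


def backup(chunks, index, store):
    """Store only chunks whose hash is not yet in the index; return the new ones."""
    new_chunks = []
    for chunk in chunks:
        h = hashlib.sha1(chunk).hexdigest()
        if h not in index:       # unknown hash: treat the data as new
            index.add(h)
            store.append(chunk)
            new_chunks.append(chunk)
        # known hash: presumed duplicate, nothing is stored
    return new_chunks


index, store = set(), []
first = backup([b"A", b"B", b"C", b"D", b"E"], index, store)
second = backup([b"A", b"B", b"C", b"D", b"F"], index, store)
print(len(first), second, len(store))  # 5 [b'F'] 6
```

The first run stores all five chunks. The second run stores only F, so six chunks are kept in total for ten chunks processed.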

A – Fixed-Length or Fixed Block

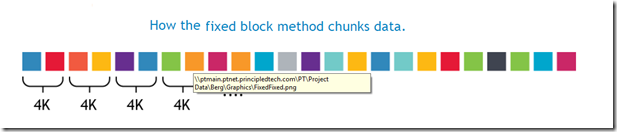

This data deduplication algorithm breaks the data into chunks or blocks whose size and boundaries are fixed, for example 4KB or 8KB. The block size never changes: while different devices/solutions may use different block sizes, the block size for a given device/solution using this method remains constant.

The device/solution always calculates a fingerprint or signature on a fixed block and checks whether there is a match. After a block is processed, it advances by exactly the same size, takes another block, and the process repeats.
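As a rough sketch, fixed-block chunking is little more than slicing at a constant stride. The `fixed_blocks` helper, the 4 KB default and the SHA-1 fingerprints below are assumptions for illustration.

```python
import hashlib


def fixed_blocks(data: bytes, block_size: int = 4096):
    """Yield consecutive blocks of block_size bytes (the last one may be shorter)."""
    for offset in range(0, len(data), block_size):
        yield data[offset:offset + block_size]


data = b"x" * 4096 + b"y" * 4096 + b"x" * 4096 + b"z" * 100
fingerprints = [hashlib.sha1(block).hexdigest() for block in fixed_blocks(data)]
print(len(fingerprints), len(set(fingerprints)))  # 4 3
```

Four blocks are fingerprinted but only three are unique, because the first and third blocks hold identical data.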

Advantages

Requires minimal CPU overhead; fast and simple.

Disadvantages

Because the block size and block boundaries are fixed, the main limitation of this approach is that when the data inside a file is shifted, for example when adding a slide to a Microsoft PowerPoint deck, all subsequent blocks in the file are rewritten and are likely to be considered different from those in the original file. Smaller block sizes give better deduplication than larger ones, but take more processing to deduplicate. Larger block sizes give lower deduplication, but take less processing.

So the bottom line is lower storage savings and less efficient deduplication.

B – Variable-Length or Variable Block

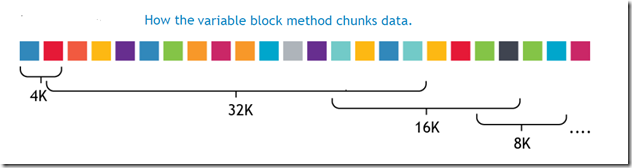

This data deduplication algorithm breaks the data into chunks or blocks whose size and boundaries are variable, for example 4KB, 8KB or 16KB, and the block size changes dynamically during the process. The device/solution always calculates a fingerprint or signature on a variable-size block and checks whether there is a match. After a block is processed, it determines where the next block ends, takes that block, and the process repeats.

Advantages

Higher deduplication ratio, high storage space savings.

Disadvantages

While variable block deduplication may yield better deduplication than the fixed block approach, it does require you to pay a price. The price is the CPU cycles that must be spent determining the block boundaries. The variable block approach requires more processing than fixed block because the whole file must be scanned, one byte at a time, to identify block boundaries.
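One common way to pick those boundaries is content-defined chunking: a rolling hash is updated for every byte, and a block ends wherever the hash matches a chosen bit pattern. The sketch below uses a Gear-style rolling hash. The post does not say which scheme any product uses, so the `GEAR` table, `variable_chunks`, the mask and the size limits are all my assumptions.

```python
import hashlib
import random

# One pseudo-random 32-bit value per byte value (assumed Gear-style table).
GEAR = [int.from_bytes(hashlib.sha1(bytes([b])).digest()[:4], "big")
        for b in range(256)]


def variable_chunks(data: bytes, min_size=64, max_size=4096, mask=0xFFC00000):
    """Scan byte by byte; cut where the rolling hash has its top 10 bits clear."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        # Shifting left pushes older bytes out of the 32-bit hash over time.
        h = ((h << 1) + GEAR[byte]) & 0xFFFFFFFF
        length = i - start + 1
        if (length >= min_size and (h & mask) == 0) or length >= max_size:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])  # trailing partial chunk
    return chunks


rng = random.Random(42)
original = bytes(rng.getrandbits(8) for _ in range(20000))
edited = original[:10000] + b"!" + original[10000:]  # insert one byte mid-file

stored = set(variable_chunks(original))
new_chunks = variable_chunks(edited)
reused = sum(1 for chunk in new_chunks if chunk in stored)
print(f"{reused} of {len(new_chunks)} chunks reused after a one-byte insert")
```

Every byte passes through the hash update, which is exactly the extra CPU cost described above. In return, boundaries depend on the content rather than on absolute offsets, so they tend to line up again shortly after an insertion.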

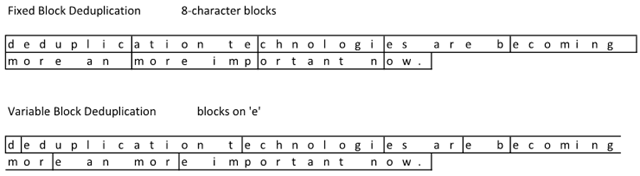

The following example, based on this sentence, explains it in detail: “deduplication technologies are becoming more an more important now.”

Notice how the variable block deduplication has some funky block sizes. While this does not look too efficient compared to fixed block, check out what happens when I make a correction to the sentence. Oops… it looks like I used ‘an’ when it should have been ‘and’. Time to change the file: “deduplication technologies are becoming more and more important now.” File –> Save

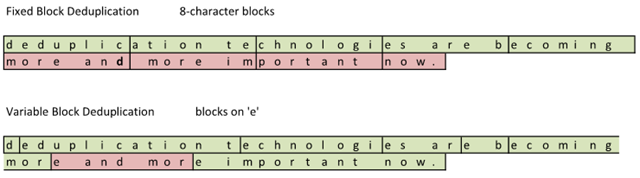

After the file was changed and deduplicated, this is what the storage subsystem saw:

The red sections represent the blocks that have changed. By adding a single character to the sentence, a ‘d’, the sentence length shifted and more blocks suddenly changed. The fixed block solution saw 4 out of 9 blocks changed. The variable block solution saw 1 out of 9 blocks changed.
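These counts can be reproduced with a toy Python experiment. Two assumptions of mine go into it: the fixed blocks are 8 characters long, and the “variable” blocks simply end after each space. Real products find variable boundaries with a rolling hash rather than by looking for spaces, so treat this as an illustration only.

```python
import re

OLD = "deduplication technologies are becoming more an more important now."
NEW = "deduplication technologies are becoming more and more important now."


def fixed_blocks(text, size=8):
    """Cut every `size` characters, regardless of content."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def word_blocks(text):
    """Cut after each space, so boundaries follow the content (toy stand-in)."""
    return re.findall(r"[^ ]+ ?", text)


def changed(old_blocks, new_blocks):
    """Count blocks of the new version that were not already stored."""
    stored = set(old_blocks)
    return sum(1 for block in new_blocks if block not in stored)


fixed_changed = changed(fixed_blocks(OLD), fixed_blocks(NEW))
variable_changed = changed(word_blocks(OLD), word_blocks(NEW))
print(fixed_changed, len(fixed_blocks(NEW)))    # 4 9
print(variable_changed, len(word_blocks(NEW)))  # 1 9
```

With fixed blocks, every block from the inserted ‘d’ onwards shifts by one character and no longer matches. With content-defined boundaries, only the block containing the edit is new.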

Variable block deduplication ends up providing a higher storage density and good storage space savings.

2 – Content Aware or application-aware Deduplication

My next blog will be about the content aware dedupe.

Deduplication Internals : Part-1

Deduplication is one of the hottest technologies in the current market because of its ability to reduce costs. But it comes in many flavours, and organizations need to understand each one of them if they are to choose the one that is best for them. Deduplication can be applied to data in primary storage, backup storage, cloud storage or data in flight for replication, such as LAN and WAN transfers. It offers the following benefits:

– Substantially saves disk space, reducing storage requirements and hardware,

– Improves bandwidth efficiency,

– Improves replication speed,

– Reduces the backup window and improves RTO and RPO objectives,

– and finally, lowers COST.

What is data deduplication?

The concept is a familiar one that we see daily. A URL is a type of pointer: when someone shares a video on YouTube, they send the URL for the video instead of the video itself. There’s only one copy of the video, but it’s available to everyone. Deduplication uses this concept in a more sophisticated, automated way.

Data deduplication is a technique to reduce storage needs by eliminating redundant or duplicate data in your storage environment. Only one unique copy of the data is retained on storage media, and redundant or duplicate data is replaced with a pointer to the unique data copy.

That is, it looks at the data at a sub-file (i.e. block) level and attempts to determine whether it has seen the data before. If it hasn’t, it stores it. If it has, it ensures that the data is stored only once, and all other references to that duplicate data are merely pointers.

How does data deduplication work?

Dedupe technology typically divides data into smaller chunks/blocks and assigns each one a unique hash identifier called a fingerprint. To create the fingerprint, it uses an algorithm that computes a cryptographic hash value from the data chunk/block, regardless of the data type. These fingerprints are stored in an index.

The deduplication algorithm compares the fingerprint of each data chunk/block to those already in the index. If the fingerprint exists in the index, the data chunk/block is replaced with a pointer to the existing data chunk/block. If the fingerprint does not exist, the data is written to the disk as a new unique data chunk.
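Here is a minimal Python sketch of that write path, plus the read path that follows the pointers back. The `DedupStore` class, the tiny 8-byte block size and the choice of SHA-1 are assumptions made for illustration, not a description of any real product.

```python
import hashlib


class DedupStore:
    """Toy store: unique blocks kept once, files kept as lists of pointers."""

    def __init__(self, block_size=8):
        self.block_size = block_size
        self.blocks = {}  # fingerprint -> unique block (the index and the data)
        self.files = {}   # file name -> list of fingerprints (the pointers)

    def write(self, name, data: bytes):
        pointers = []
        for i in range(0, len(data), self.block_size):
            block = data[i:i + self.block_size]
            fp = hashlib.sha1(block).hexdigest()
            self.blocks.setdefault(fp, block)  # stored only if the fingerprint is new
            pointers.append(fp)
        self.files[name] = pointers

    def read(self, name) -> bytes:
        """Rebuild a file by following its pointers."""
        return b"".join(self.blocks[fp] for fp in self.files[name])


store = DedupStore()
store.write("a.txt", b"AAAAAAAABBBBBBBBAAAAAAAA")
store.write("b.txt", b"BBBBBBBBCCCCCCCC")
pointer_count = sum(len(p) for p in store.files.values())
print(pointer_count, len(store.blocks))  # 5 3
print(store.read("a.txt"))               # b'AAAAAAAABBBBBBBBAAAAAAAA'
```

Five blocks were written but only three are stored, and each file can still be read back in full through its pointers.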

Different types of de-duplication – Dedupe methods can be broadly classified as follows:

1- Based on the technology, or how it is done.

Fixed-Length or Fixed Block Deduplication

Variable-Length or Variable Block Deduplication

Content Aware or application-aware deduplication

2- Based on the Process, or when it is done.

In-line (or as I like to call it, synchronous) de-duplication

Post-process (or as I like to call it, asynchronous) de-duplication

3- Based on the Type, or where it happens.

Source or Client side Deduplication

Target Deduplication

My next post will discuss these dedupe technologies and processes in detail.