Category Archives: HP Blades

Emulex Firmware upgrade in HP Bladecenter C7000

For system admins and VMware admins, updating the firmware of HBAs, BIOS, etc. in the servers is a common task. I would like to share the steps involved in upgrading the HP FlexFabric converged adapters in the blades. The HP FlexFabric adapters are mainly from the Emulex OneConnect Universal CNA (UCNA) adapter family.

These CNAs (Converged Network Adapters) are 10GbE adapters that can handle both FC SAN traffic, via Fibre Channel over Ethernet (FCoE), and Ethernet traffic on the same physical card. In short: SAN and LAN traffic through a single adapter!

In HP blades these adapters are either embedded on the system motherboard as a LOM (LAN on Motherboard) or installed as mezzanine adapters. As VMware releases ESXi versions frequently, we have to keep the firmware/driver levels in line with the VMware HCL and the HP VMware recipe. Here I am doing the firmware upgrade for the HP NC553i 10Gb 2-port FlexFabric Converged Network Adapter; the main steps are below.

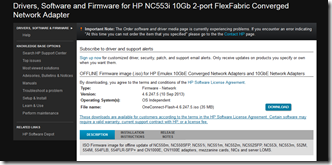

1- Download the latest Emulex OFFLINE firmware image – OneConnect-Flash-4.6.247.5.iso (35 MB) from this LINK

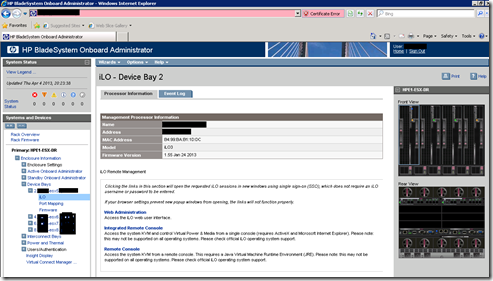

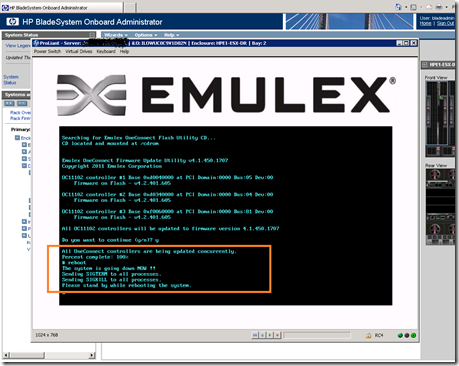

2- Log in to the Onboard Administrator (OA) of the HP BladeSystem enclosure and open the iLO (Integrated Lights-Out) console of that blade.

– It is good to use the “Integrated Remote Console” option for the iLO, so that you get the iLO console directly in one mouse click; otherwise just use the normal “Web Administration”.

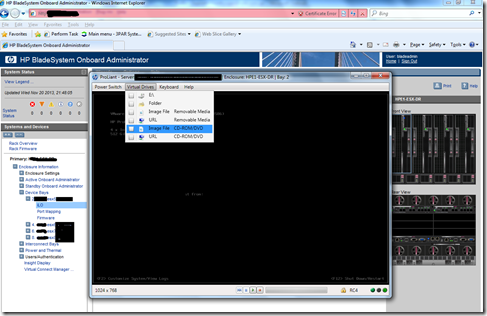

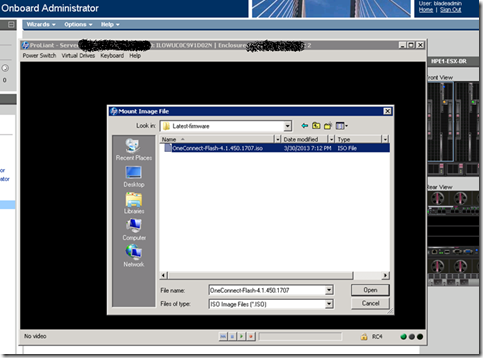

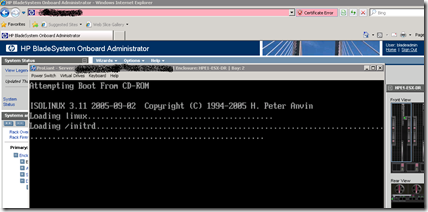

3- In the iLO console, mount the Emulex firmware ISO image, then reboot the blade and boot from the CD. Ensure the first boot device is CD/DVD.

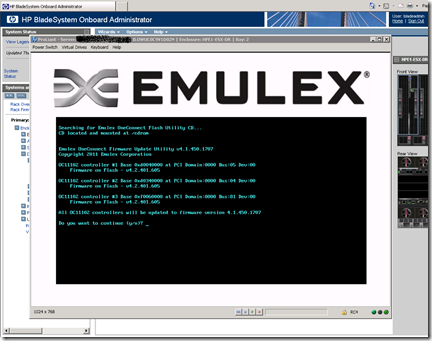

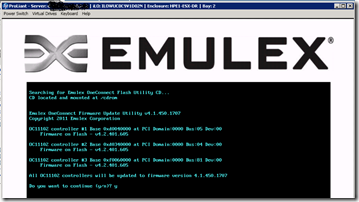

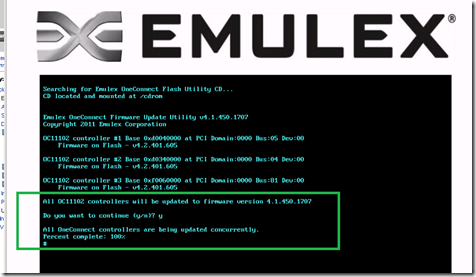

4- Once you have booted from the CD you will get the Emulex OneConnect flash utility console. Type “y” at the command prompt.

5- Once the firmware upgrade is successful, it will show 100% complete.

6- Now reboot the blade and unmount the ISO image from the iLO virtual drive. To reboot, type reboot at the command prompt.
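Before mounting the ISO it can help to confirm that the adapter actually needs the upgrade. The snippet below is only a minimal sketch of comparing dotted firmware version strings: the target version comes from the ISO name above, while the installed version and the helper names are made up for illustration.

```python
def parse_version(version: str) -> tuple:
    """Turn a dotted version string such as '4.6.247.5' into a tuple of ints."""
    return tuple(int(part) for part in version.split("."))


def needs_upgrade(installed: str, target: str) -> bool:
    """Return True when the installed firmware is older than the target."""
    return parse_version(installed) < parse_version(target)


# Target version taken from the ISO name; the installed version is an
# invented example, not a value read from a real adapter.
TARGET = "4.6.247.5"
print(needs_upgrade("4.2.401.6", TARGET))
print(needs_upgrade("4.6.247.5", TARGET))
```

Comparing tuples of integers rather than raw strings matters here, because a plain string comparison would rank a component like 10 below 6.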

Now enjoy the rest of the day with a cup of Coffee !!

Oracle RAC Cluster in VMware vSphere : VMotion Caveat

Last week I faced a very critical issue with our Oracle RAC cluster in vSphere 5. For ESXi maintenance we performed a vMotion of the Oracle nodes, and then the FUN began!

OUR ENVIRONMENT

We have a 2-node Oracle RAC cluster running on vSphere 5

For the Oracle RAC cluster we are using the VMFS5 file system, that is, VMDKs only (no RDMs)

Oracle nodes are running Windows 2008 R2 SP1 and Oracle 11.2.0.3

The clusters are running on an HP BladeSystem c7000 with ProLiant BL680c G7 blades

The Oracle private and public networks use a vDS (VMware distributed switch)

For storage, we are using an HP 3PAR FC SAN for vSphere 5

ISSUE

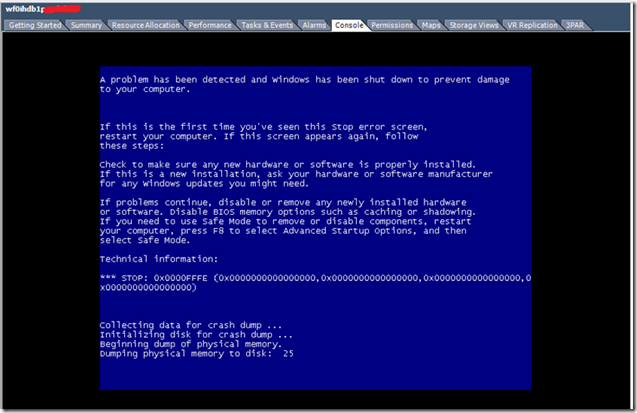

We performed a vMotion of the Oracle RAC cluster nodes one by one. After that we got a BSOD on both nodes and the VMs crashed completely.

I spent some time researching the stop code shown in the BSOD: STOP 0x0000FFFE

It appears that this is not a normal blue screen, as the above stop code is not issued by Microsoft. It is actually a blue screen forced by Oracle. Unfortunately, I was unable to find specific information about it on Oracle’s website, but I did find the following blog article (this information may not be completely reliable, as it is just a blog article):

“OraFence has a built-in mechanism to check it was scheduled in time. If it is not scheduled within 5 seconds it will also reboot the note. In this way, OraFence is designed to fence and reboot a node if it perceives that a given node is ‘hung’ once its own timeout has been reached. Note that the default timeout for the OraFence driver is a (very low) 0x05 (5 seconds). What this means is that if the OraFence driver detects what it perceives to be a hang for example at the operating system level and that hang persists beyond 5 seconds, it’s possible that the OraFence driver – of its own accord – will fence and evict the node.” —

http://dbmentors.blogspot.com/2012_09_01_archive.html

RCA for the ISSUE :

The above article suggests that a simple timeout could cause an Oracle driver to BSOD the machine with the stop code 0x0000FFFE. This could occur during a vMotion simply because the operating system is quiesced during the vMotion and, depending upon the VM RAM and vMotion configuration, it takes around 16 seconds to complete the vMotion (we have configured Multi-NIC vMotion on the HP blades).

Oracle uses a fencing mechanism to reboot nodes in the RAC cluster, just like SCSI fencing in a Red Hat cluster. The default timeout for the OraFence driver is 5 seconds; the vMotion quiescence took longer than that, and this caused the BSOD.

The above blog article recommends increasing the timeout from 5 seconds because that value is very aggressive. I would recommend contacting Oracle Support with the details, as they would probably have a more complete picture of how their product forces a BSOD and how to avoid it in the future.

RESOLUTION :

Increase the default timeout for the OraFence driver, so that the BSOD does not occur again due to a quiesced operating system (during vMotion or in a DRS/HA environment).
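The reasoning behind this boils down to comparing how long the guest is unresponsive with the fencing timeout. Here is a minimal sketch of that comparison. The 5-second default and the roughly 16-second vMotion come from this post; the function name and the 30-second value are illustrative assumptions only, not an Oracle recommendation.

```python
def would_fence(pause_seconds: float, timeout_seconds: float) -> bool:
    """Return True if a pause of this length outlasts the fencing timeout."""
    return pause_seconds > timeout_seconds


DEFAULT_TIMEOUT = 5     # OraFence default quoted in the blog article
OBSERVED_PAUSE = 16     # approximate vMotion duration seen in this environment
PROPOSED_TIMEOUT = 30   # hypothetical larger value, for illustration only

for timeout in (DEFAULT_TIMEOUT, PROPOSED_TIMEOUT):
    fenced = would_fence(OBSERVED_PAUSE, timeout)
    verdict = "node fenced (BSOD)" if fenced else "node survives"
    print(f"timeout={timeout}s, pause={OBSERVED_PAUSE}s -> {verdict}")
```

Whatever value is chosen should leave a margin above the longest pause you expect, and it is worth confirming the setting with Oracle Support.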

Design Notes

While designing an Oracle RAC cluster in vSphere, taking this into consideration will avoid such crashes. It should also be considered if you are running the RAC nodes in a VMware HA/DRS environment.

ESXi VMFS Heap size Blockade – For Monster Virtual Machines in Bladecenter Infrastructure

Recently I faced a big issue with my client. We were migrating VMs from old HP ProLiant servers to HP BladeSystem, using vSphere 5, HP BL680c G7 blades (full-height, double-wide blade – 512 GB RAM, quad socket) and an HP 3PAR V400 FC SAN.

The VMs are very large at the VMDK level: some of them are 10 TB and most of the others have VMDKs in the 2 to 3 TB range. We moved around 140 VMs (35 per ESXi host).

After that we were not able to do vMotion, DRS or Storage vMotion, and we were not able to create new VMs.

I believe those who are planning to achieve a higher consolidation ratio in their datacenter with vSphere will definitely face this issue. Nowadays HP and IBM blade centers, IBM PureFlex, etc. dominate the big datacenters, and the specs of rack servers (RAM size, etc.) are huge as well. So this factor has to be considered well in advance while doing a new vSphere implementation or design. The errors we got are:

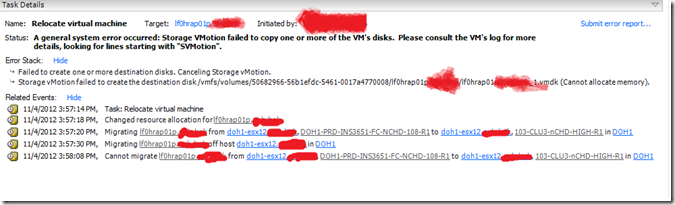

During Storage vMotion –

Relocate virtual machine lf0hrap01p.xxxx A general system error occurred: Storage VMotion failed to copy one or more of the VM ‘s disks. Please consult the VM’s log for more details, looking for lines starting with “SVMotion”.

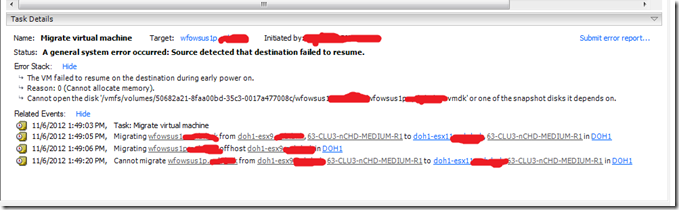

During vMotion –

Migrate virtual machine wfowsus1p.xxxx A general system error occurred: Source detected that destination failed to resume.

During HA –

After virtual machines are failed over by vSphere HA from one host to another due to a host failover, the virtual machines fail to power on with the error:

vSphere HA unsuccessfully failed over this virtual machine. vSphere HA will retry if the maximum number of attempts has not been exceeded. Reason: Cannot allocate memory.

During manual VM migration –

When you try to manually power on a migrated virtual machine, you may see the error:

The VM failed to resume on the destination during early power on.

Reason: 0 (Cannot allocate memory).

Cannot open the disk ‘<<Location of the .vmdk>>’ or one of the snapshot disks it depends on.

You see warnings in /var/log/messages or /var/log/vmkernel.log similar to:

vmkernel: cpu2:1410)WARNING: Heap: 1370: Heap_Align(vmfs3, 4096/4096 bytes, 4 align) failed. caller: 0x8fdbd0

vmkernel: cpu2:1410)WARNING: Heap: 1266: Heap vmfs3: Maximum allowed growth (24) too small for size (8192)

cpu4:1959755)WARNING:Heap: 2525: Heap vmfs3 already at its maximum size. Cannot expand.

cpu4:1959755)WARNING: Heap: 2900: Heap_Align(vmfs3, 2099200/2099200 bytes, 8 align) failed. caller: 0x418009533c50

cpu7:5134)Config: 346: “SIOControlFlag2” = 0, Old Value: 1, (Status: 0x0)
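To check quickly whether a host is hitting this condition, the log can be scanned for heap warnings like the ones above. The following is a minimal sketch: the regular expression is my own, written against the sample lines in this post, and the function name is hypothetical. Real log lines may vary between ESXi builds.

```python
import re

# Matches "WARNING: Heap: <n>: Heap_Align(<heap>, ..." as well as
# "WARNING:Heap: <n>: Heap <heap> ..." and captures the heap name.
HEAP_WARNING = re.compile(
    r"WARNING:\s*Heap: \d+: (?:Heap_Align\(|Heap )(?P<heap>\w+)"
)


def find_heap_warnings(lines, heap_name="vmfs3"):
    """Return the log lines that carry a heap warning for heap_name."""
    hits = []
    for line in lines:
        match = HEAP_WARNING.search(line)
        if match and match.group("heap") == heap_name:
            hits.append(line.strip())
    return hits


# Sample lines copied from the excerpt above, plus one unrelated line.
sample = [
    "vmkernel: cpu2:1410)WARNING: Heap: 1370: Heap_Align(vmfs3, 4096/4096 bytes, 4 align) failed. caller: 0x8fdbd0",
    "vmkernel: cpu2:1410)WARNING: Heap: 1266: Heap vmfs3: Maximum allowed growth (24) too small for size (8192)",
    "cpu4:1959755)WARNING:Heap: 2525: Heap vmfs3 already at its maximum size. Cannot expand.",
    "cpu7:5134)Config: 346: SIOControlFlag2 = 0, Old Value: 1, (Status: 0x0)",
]
for hit in find_heap_warnings(sample):
    print(hit)
```

On a real host the same function could be fed the lines of /var/log/vmkernel.log instead of the sample list.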

Resolution :-

The reason for this issue is that, with the default installation/configuration of an ESXi host, the VMkernel has a limit on how much open VMDK capacity it can handle on VMFS file systems.

The default heap size in ESXi/ESX 3.5/4.0 for VMFS-3 is set to 16 MB. This allows for a maximum of 4 TB of open VMDK capacity on a single ESX host.

The default heap size has been increased in ESXi/ESX 4.1 and ESXi 5.x to 80 MB, which allows for 8 TB of open virtual disk capacity on a single ESX host.

VMware KB article 1004424 explains this and gives the steps to resolve the issue.

We need to change the VMFS heap size of the ESXi host (advanced setting VMFS3.MaxHeapSizeMB) to 256 MB and reboot the host.
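To see why the default was not enough here, one can add up the VMDK capacity that a host keeps open and compare it with the limit for its heap size. The sketch below uses the 8 TB limit for the 80 MB default quoted above. The per-VM sizes are invented round numbers in the range described in this post, and the function names are hypothetical.

```python
DEFAULT_LIMIT_TB = 8  # open VMDK capacity allowed by the 80 MB default heap


def open_vmdk_capacity_tb(vmdk_sizes_tb):
    """Total capacity of all VMDKs a host has open, in TB."""
    return sum(vmdk_sizes_tb)


def exceeds_limit(vmdk_sizes_tb, limit_tb=DEFAULT_LIMIT_TB):
    """Return True when the open VMDK capacity is above the heap's limit."""
    return open_vmdk_capacity_tb(vmdk_sizes_tb) > limit_tb


# Hypothetical host: 35 VMs, one at 10 TB and the rest at 2 TB each.
host_vmdks_tb = [10] + [2] * 34
total = open_vmdk_capacity_tb(host_vmdks_tb)
print(f"open VMDK capacity: {total} TB, limit: {DEFAULT_LIMIT_TB} TB")
print("over the limit" if exceeds_limit(host_vmdks_tb) else "within the limit")
```

The limit that applies at 256 MB should be taken from the KB article and passed in as `limit_tb`; this sketch does not try to derive it.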

NOTE :

– The article mentions only VMFS3, and in the ESXi 5 host advanced configuration you also see only VMFS3, but this applies to VMFS5 as well. We confirmed this with VMware technical support.

– The VMFS heap is part of the kernel memory, so increasing it increases the kernel’s memory consumption, which leaves less memory for the VMs on the system.

HP Virtual Connect (VC) Network Traffic Layout

Here we are going to discuss a scenario where I need to divide the entire network traffic that flows through the HP blades and the HP VC in an HP BladeSystem c7000.

Hardware Details

– HP Enclosure = BladeSystem C7000 Enclosure G2

– HP Blades = BL680c G7

– HP Virtual Connect Flex Fabric

– Dual Port FlexFabric 10Gb Converged Network Adapter

– Dual Port FlexFabric 10Gb Network Adapter

Network Traffic Details

– VMware vCenter

– FT and vMotion

– Oracle RAC Cluster

– Production Application servers

– DMZ1 (Production Web Traffic)

– DMZ2 (Production Database Traffic)

– Corporate Servers

The traffic types above need to be separated because of the heavy network load and for security reasons. This is one of the scenarios I came across while designing vSphere 5 with HP 3PAR and an HP c-Class BladeSystem for a leading bank.

There are no hard and fast rules; you can divide the traffic according to your requirements.

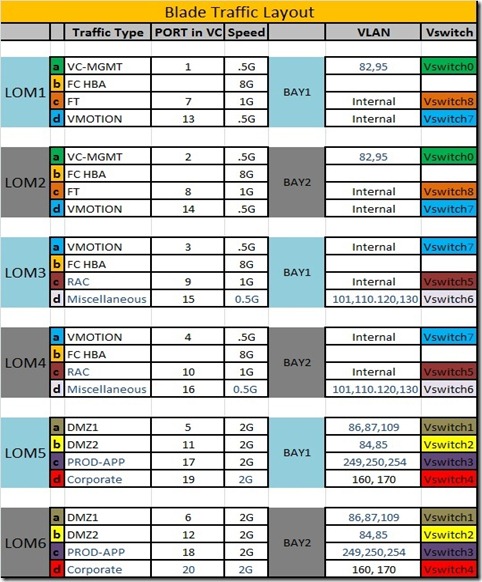

Table 1 shows the network traffic division.

More Details-

Each blade has 3 x dual-port 10Gb FlexFabric adapters on board, so in total there are 6 x 10Gb ports. They are called LOM (LAN on Motherboard) ports: LOM1 to LOM6. Each LOM is internally divided into 4 adapters, and these 4 adapters share a common bandwidth, that is, together they can have a maximum of 10Gb. We can divide the traffic inside each LOM; that is the beauty of the FlexFabric adapters.
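The two constraints just described (at most 4 adapters per LOM, sharing 10Gb between them) are easy to check when planning a split. This is a minimal sketch: the traffic names and bandwidth figures are invented for illustration and are not the values from Table 1, and the function name is hypothetical.

```python
LOM_BANDWIDTH_GB = 10   # each LOM is a 10Gb port
MAX_ADAPTERS = 4        # each LOM is divided into 4 adapters


def validate_lom(allocations_gb):
    """Check one LOM's per-adapter bandwidth split; return a list of problems."""
    problems = []
    if len(allocations_gb) > MAX_ADAPTERS:
        problems.append(
            f"{len(allocations_gb)} adapters defined, maximum is {MAX_ADAPTERS}"
        )
    total = sum(allocations_gb.values())
    if total > LOM_BANDWIDTH_GB:
        problems.append(
            f"{total}Gb allocated, only {LOM_BANDWIDTH_GB}Gb available"
        )
    return problems


# Hypothetical split of one LOM; names and numbers are illustrative only.
lom1 = {"management": 1, "vmotion_ft": 3, "fcoe_san": 4, "production": 2}
print(validate_lom(lom1) or "LOM1 split is valid")
```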

Here LOM1 to LOM4 are 10Gb FlexFabric converged adapters (FCoE), so each of these LOMs has one FC port, which is used for SAN traffic. LOM5 and LOM6 are normal 10Gb FlexFabric adapters.

There are 2 HP Virtual Connect (VC) modules in the enclosure, installed in BAY1 and BAY2. For redundancy, LOM1, LOM3 and LOM5 are internally connected to BAY1, and LOM2, LOM4 and LOM6 to BAY2. Each VC has one uplink for the network and one uplink to the FC switch (SAN), and both VCs run in Active/Active mode. Each traffic type therefore has at least 2 adapters, one on a LOM connected to each bay, which gives redundancy, HA and load balancing.

The VC is simply a Layer 2 network device; it won’t do routing.
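The odd/even wiring described above makes it simple to verify that every traffic type really reaches both VC modules. Below is a minimal sketch of that check. The assignment of traffic types to LOMs is hypothetical and the function names are my own.

```python
def bay_for_lom(lom_number):
    """Odd LOMs connect to bay 1, even LOMs to bay 2, as described above."""
    return 1 if lom_number % 2 == 1 else 2


def is_redundant(lom_numbers):
    """A traffic type is redundant when its LOMs reach both VC bays."""
    return {bay_for_lom(n) for n in lom_numbers} == {1, 2}


# Hypothetical assignment of traffic types to LOMs, for illustration only.
layout = {
    "vmotion_ft": [1, 2],
    "oracle_rac": [3, 4],
    "dmz1": [5, 6],
    "badly_planned": [1, 3],  # both LOMs land on bay 1
}
for traffic, loms in layout.items():
    status = "redundant" if is_redundant(loms) else "single point of failure"
    print(f"{traffic}: LOMs {loms} -> {status}")
```

A pairing such as LOM1 with LOM3 looks like two adapters on paper, but both sit behind the same VC module, so losing that module takes the traffic type down.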

NOTE-

Here the vMotion and FT traffic flows inside the BladeSystem backplane itself and does not go to the VC or the external core switch.

This is a specific scenario: all blades inside the enclosure are configured together as one ESXi cluster, so there is no need to do vMotion or FT outside the enclosure. The advantage is that the vMotion and FT traffic won’t overload the VC or the core switch.